Apple officially entered the artificial intelligence (AI) market with Apple Intelligence, its suite of personalized AI services. Apple unveiled it at its Worldwide Developers Conference (WWDC) on June 10, 2024, marking a major step in the company's AI journey.

Apple may appear to lag behind tech giants such as Google, Microsoft and Meta in AI integration. However, the delay could be strategic: it gives Apple the chance to learn from others' mistakes and to set a standard for building safer, more responsible AI products. According to CEO Tim Cook, "All of this goes beyond artificial intelligence. It's personal intelligence, and it is the next big step for Apple."

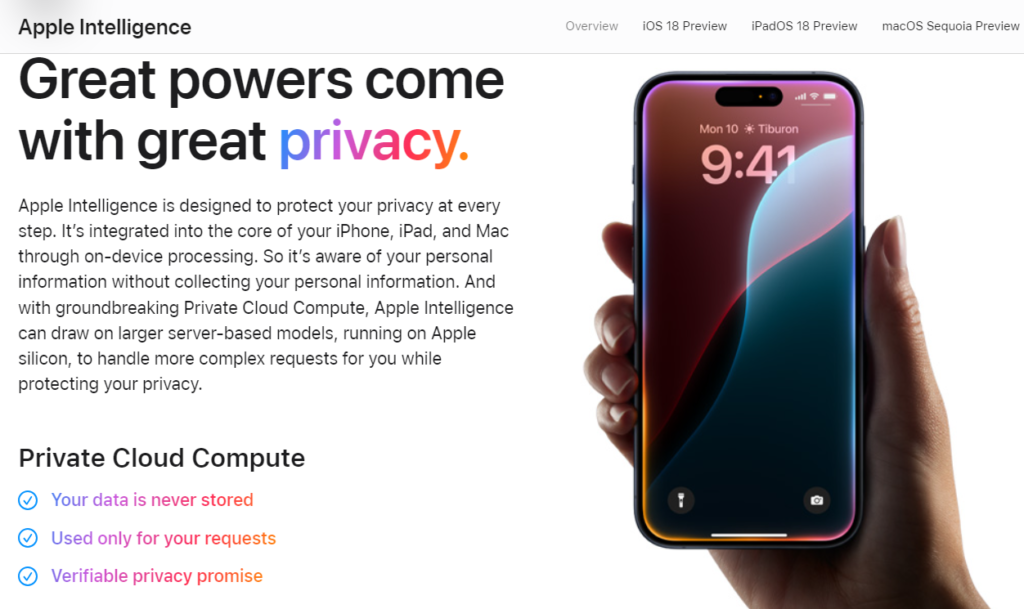

Craig Federighi, Apple's senior vice president of software engineering, emphasized that "Apple Intelligence puts AI models right at the core of your iPhone, iPad and Mac and protects your privacy at every step."

How Apple protects your privacy at every step

Apple prides itself on its approach to privacy protection, and much of that approach rests on its significant investment in custom-built Apple Silicon chips. These processors are powerful enough to run many AI tasks directly on the device. Unlike many AI products that send every request to remote servers, Apple Intelligence can handle these tasks locally, so the user data involved stays on the device and is not accessible even to Apple.

Some tasks, however, are too complex to process on-device. For these, Apple introduced Private Cloud Compute (PCC), a cloud system designed specifically for private AI processing. PCC handles tasks that need more computational power while preserving privacy: it does not retain user data or share it with third parties, including Apple.

To promote trust and transparency, Apple allows independent experts to inspect the code that runs on its Apple Silicon servers and verify that the privacy measures work as claimed. PCC uses cryptographic techniques to destroy request data once a request has been completed. By relying on hardware-based security features such as Secure Boot and the Secure Enclave, Apple aims to provide a more secure environment for AI computations.

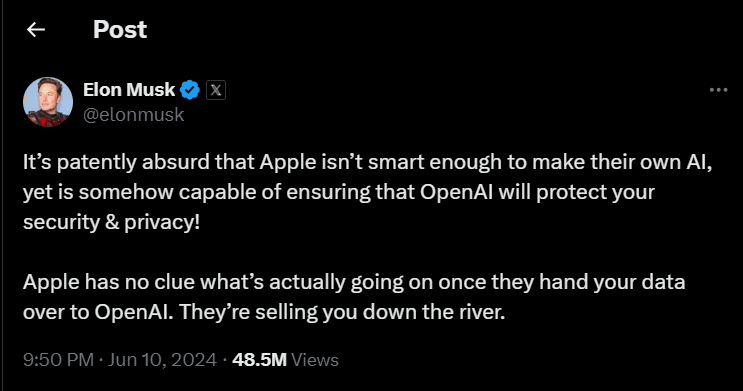

Apple also partnered with OpenAI to integrate ChatGPT into its products, extending the capabilities of Siri and its writing tools. The partnership, however, drew criticism from Elon Musk, who voiced his concerns on X.

Apple, for its part, maintains that it has built privacy protections into ChatGPT access from Siri and its writing tools. Users must explicitly grant permission before a request is sent to ChatGPT, OpenAI does not store these requests, and users' IP addresses are obscured for added protection.

Cybersecurity experts caution that the effectiveness of Apple's privacy and security measures will depend on proper implementation and user education, but Apple's commitment to data privacy is clear. As Apple rolls out beta versions of these features in the coming months, the AI and cybersecurity communities will be watching closely to see how this approach to data privacy holds up in practice. In the meantime, Apple is showing other companies one way to balance innovation with user privacy in the rapidly evolving AI era.