Artificial Intelligence (AI) has delivered numerous benefits, and we believe these are just the tip of the iceberg. On the other hand, AI has also put us in a serious predicament: threat actors are constantly finding ways to exploit it to carry out malicious attacks.

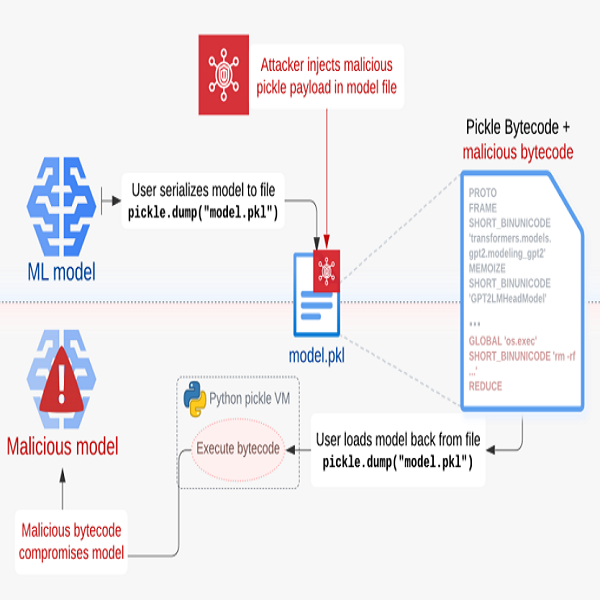

Recently, researchers at Trail of Bits discovered a new technique called Sleepy Pickle that can poison machine learning models by injecting malicious code into the file that holds the serialized model. Serialization, known in Python as pickling, is the process of converting a Python object into a series of bytes for storage or sharing.
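To make the idea of pickling concrete, here is a minimal sketch using Python's standard `pickle` module. The `weights` dictionary is a hypothetical stand-in for a model; real models hold tensors and far more structure.

```python
import pickle

# Hypothetical stand-in for model weights (an assumption for illustration).
weights = {"layer1": [0.1, 0.2], "bias": 0.5}

# Serialize (pickle) the object into a byte string that can be stored or shared.
blob = pickle.dumps(weights)

# Deserialize (unpickle) the bytes back into an equivalent Python object.
restored = pickle.loads(blob)

print(type(blob).__name__)   # the serialized form is plain bytes
print(restored == weights)   # the round trip preserves the data
```

The convenience is the point: one call saves an arbitrary object graph and one call restores it, which is also why the format is attractive to attackers.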

In her article on Securing AI-as-a-Service, Victoria Robinson gave insight into how Hugging Face’s infrastructure could be weaponized by simply uploading an Artificial Intelligence/Machine Learning model based on the Pickle format to its site. This report by Wiz researchers is a typical example of how the Pickle format can be exploited in an AI-as-a-Service platform. Despite its known security risks, the Pickle format is still widely used, even by Hugging Face, because it conserves memory, enables start-and-stop model training, and makes trained models portable and shareable.

However, this creates an opportunity for threat actors to insert malicious bytecode into an otherwise harmless pickle file. Sleepy Pickle differs from most exploits in that it aims to compromise the Machine Learning model itself, so that it can target the organization’s end-users. The Hacker News reports that this is also more effective than directly uploading a malicious model to Hugging Face, as it can modify model behavior or output dynamically without the attacker having to entice targets into downloading and running a malicious model.

How the attack works

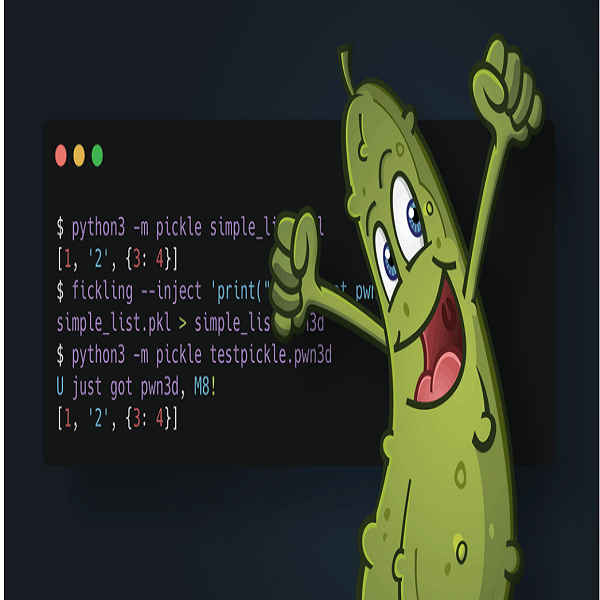

Researchers at Trail of Bits explained that Sleepy Pickle works by creating a malicious pickle opcode sequence that executes an arbitrary Python payload during deserialization. The payload is embedded as a string within that opcode sequence, and the attacker inserts the sequence into a pickle file containing a serialized machine learning model, using an open source tool like Fickling. The poisoned file is then delivered to the target host through various techniques such as adversary-in-the-middle (AitM) attacks, phishing, supply chain compromise, or the exploitation of a system’s weaknesses.
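The underlying mechanism can be shown with a deliberately harmless sketch. This is not the Sleepy Pickle payload and does not use Fickling; it only illustrates, with the standard library, that a pickle can name a callable and a string argument, and that the callable runs when the file is loaded. The class name `Demo` and the arithmetic string are assumptions chosen for illustration.

```python
import pickle

class Demo:
    """Hypothetical object whose pickle asks the loader to call eval()."""

    def __reduce__(self):
        # pickle stores "call this function with these arguments" and
        # performs the call at load time. The string here is harmless
        # arithmetic; an attacker would place real code in its position.
        return (eval, ("6 * 7",))

blob = pickle.dumps(Demo())

# Loading does not rebuild a Demo instance. It evaluates the embedded string.
result = pickle.loads(blob)
print(result)  # prints 42
```

Nothing in the loading code asked for `eval` to run; the instruction came entirely from the bytes. That is why a pickle file from an untrusted source should be treated like an executable.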

One of the concerning aspects of this attack is that the attacker does not need local or remote access to the target system, and the attack leaves no trace of malware behind. It is also difficult to detect due to the small size of the custom malicious code. Sleepy Pickle can be used to escalate other attacks, such as spreading misinformation or harmful outputs, stealing user data, or phishing users.
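A small payload is easy to miss by eye, but a pickle's opcode stream can be listed without executing it. The sketch below uses the standard `pickletools` module to flag opcodes that import or call objects. The `SUSPICIOUS` set and the `CallsLen` class are assumptions made for this example, and the check is a rough heuristic, not a substitute for a dedicated scanner.

```python
import pickle
import pickletools

# Opcodes that import names or invoke callables (an illustrative choice).
SUSPICIOUS = {"GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ",
              "NEWOBJ", "NEWOBJ_EX"}

def suspicious_opcodes(blob):
    """Return the flagged opcode names found in blob, without loading it."""
    found = {opcode.name for opcode, _arg, _pos in pickletools.genops(blob)}
    return found & SUSPICIOUS

class CallsLen:
    """Hypothetical object whose pickle asks the loader to call len()."""

    def __reduce__(self):
        return (len, ("abc",))

plain = pickle.dumps({"layer1": [0.1, 0.2]})
risky = pickle.dumps(CallsLen())

print(suspicious_opcodes(plain))   # plain data needs no imports or calls
print(suspicious_opcodes(risky))   # includes REDUCE, the "call" opcode
```

Legitimate model files also use these opcodes to rebuild their own classes, so a hit means "inspect what is being imported", not "this file is malicious".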

To contain the damage, the researchers suggested controls like sandboxing, isolation, privilege limitation, firewalls, and egress traffic control, which can prevent the payload from damaging the user’s system or from stealing or altering user data. The best way to protect against Sleepy Pickle and other supply chain attacks is to use Machine Learning models only from trusted organizations and rely on safer file formats like SafeTensors. Unlike Pickle, SafeTensors deals only with tensor data, not Python objects, removing the risk of arbitrary code execution during deserialization.
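Where a pickle file still has to be loaded, the Python documentation describes a further hardening step: restricting which globals the unpickler may import. The sketch below follows that approach. It is not SafeTensors and is not one of the controls the researchers listed; the names `ALLOWED`, `RestrictedUnpickler`, `restricted_loads`, and `CallsLen` are assumptions for illustration.

```python
import io
import pickle

# Hypothetical allow list of (module, name) pairs. Empty means that only
# plain data (dicts, lists, numbers, strings) can be loaded.
ALLOWED = set()

class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Called whenever the pickle tries to import a global.
        if (module, name) in ALLOWED:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")

def restricted_loads(blob):
    """Load a pickle, refusing any import that is not on the allow list."""
    return RestrictedUnpickler(io.BytesIO(blob)).load()

class CallsLen:
    """Hypothetical object whose pickle asks the loader to call len()."""

    def __reduce__(self):
        return (len, ("abc",))

safe_blob = pickle.dumps({"bias": 0.5})
risky_blob = pickle.dumps(CallsLen())

print(restricted_loads(safe_blob))
try:
    restricted_loads(risky_blob)
except pickle.UnpicklingError as exc:
    print("refused:", exc)
```

An allow list narrows the attack surface but has to be maintained per model format, which is part of why a data-only format is the more robust choice.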

As the researchers at Trail of Bits stated, “Sleepy Pickle demonstrates that advanced model-level attacks can exploit lower-level supply chain weaknesses via the connections between underlying software components and the final application.”