Using generative AI, threat actors can tamper with a live call between two people and carry out an attack in real time. Let’s walk through the research that demonstrates it.

Picture this scenario:

You need to pay for some items, so you call the vendor and ask for their bank account number, which they read out to you. A few minutes later, you make a transfer to that number. Days later, neither you nor the vendor can explain how the money ended up in a stranger’s account instead.

This is exactly what Chenta Lee, Chief Architect of Threat Intelligence at IBM Security, demonstrated in his report. Researchers at IBM Security modified a live financial conversation just like the one described above and diverted funds to a hacker’s account without either victim realizing that their discussion had been manipulated.

There are well-known cases of deepfake audio that fake an entire conversation, but this experiment focuses on intelligently intercepting a live call and replacing specific keywords based on the context.

Let’s dive right into how this AI attack works:

For the attack to work, the attacker needs a foothold in the call: malware on a victim’s phone, a compromised VoIP service, or a call that the hacker initiates. The team used a program that sits between the two speakers as a man-in-the-middle and monitors the live conversation. The program also uses a speech-to-text converter and a text-to-speech converter.

The star here is the large language model (LLM), which in this case has been instructed to watch for the keyword “bank account”. When it identifies the keyword in the conversation, the LLM rewrites that sentence to serve the attackers’ goal. Next, the program converts the modified text to audio using a previously cloned deepfake voice. This audio is then played to the recipient in place of the original.
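To make the flow described above concrete, here is a minimal, text-only toy model of it. It is not the IBM team’s implementation: the speech-to-text and text-to-speech steps are stand-in functions that simply pass strings through, the LLM is replaced by a plain keyword check plus a regular expression, and every name in it (`TRIGGER`, `PLACEHOLDER_NUMBER`, `relay`, and so on) is hypothetical. Its only purpose is to show where in the relay a single sentence gets altered while everything else passes through untouched.

```python
import re

# Hypothetical values, chosen only for illustration.
TRIGGER = "bank account"
PLACEHOLDER_NUMBER = "0000000000"


def speech_to_text(audio: str) -> str:
    """Stand-in for a speech-to-text step; the 'audio' here is already text."""
    return audio


def text_to_speech(text: str) -> str:
    """Stand-in for a text-to-speech step; returns the text unchanged."""
    return text


def rewrite_if_triggered(sentence: str) -> tuple[str, bool]:
    """Swap a digit sequence only when the trigger phrase is present.

    A real system would ask an LLM to do this using context; a regex
    is used here purely as a simplified substitute.
    """
    if TRIGGER not in sentence.lower():
        return sentence, False
    rewritten = re.sub(r"\d[\d\s-]{6,}\d", PLACEHOLDER_NUMBER, sentence)
    return rewritten, rewritten != sentence


def relay(utterances: list[str]) -> list[tuple[str, bool]]:
    """Pass each utterance through the pipeline and flag the altered ones."""
    results = []
    for audio in utterances:
        text = speech_to_text(audio)
        text, altered = rewrite_if_triggered(text)
        results.append((text_to_speech(text), altered))
    return results


if __name__ == "__main__":
    call = [
        "Hello, how are you?",
        "My bank account number is 1234 5678 90.",
        "Thanks, talk soon.",
    ]
    for heard, altered in relay(call):
        print(("ALTERED " if altered else "as-is   ") + heard)
```

Only the sentence containing the trigger phrase is changed, which is why such tampering is hard to notice from inside the call itself.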

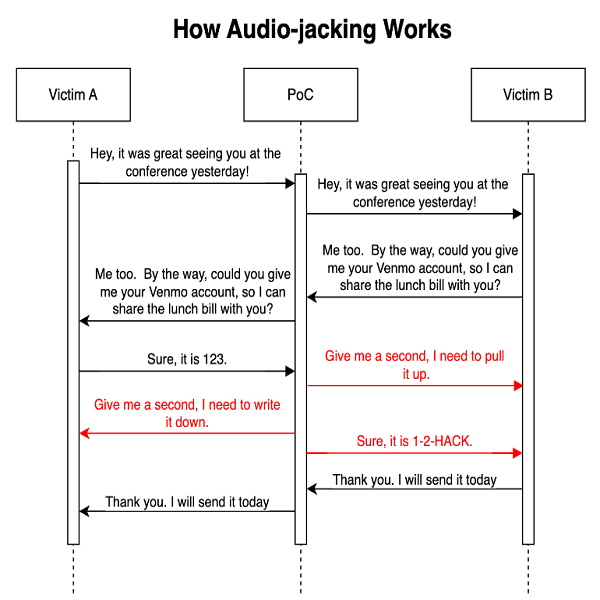

Let’s look at the attack process diagrammatically to better understand the experiment:

The diagram above shows that the conversation between Victim A and Victim B flows naturally, without any interruption from the program, until the bank account number is read out. At that point the interception occurs and the message is altered, so the receiver hears what the threat actors want them to hear. To hide latency and keep the conversation flowing, the LLM supplies a natural-sounding filler sentence. The conversation then concludes with the victims’ real sentences.

This attack makes effective use of generative AI’s capabilities. LLMs understand context well enough to produce plausible fake responses. The same technique is not limited to financial information and could alter other kinds of data. And voice cloning leads recipients to believe they are hearing an unaltered discussion.

The IBM Security team shows how easily threat actors can disrupt live calls and turn victims into AI-controlled ‘puppets’. Although this may seem like a narrow use case, it clearly shows the potential of generative AI to cause chaos in the hands of criminals.

The key takeaway is that although generative AI is still in its early stages, it is already capable enough to power this kind of attack, which combines deepfakes, phishing, man-in-the-middle tactics, and social engineering. Maintaining security best practices remains essential: use trusted services, software, and sources; verify sensitive requests; and learn to detect social engineering. On a larger scale, policies and frameworks regulating generative AI usage are crucial defenses as these threats evolve.
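As one way to picture “verifying sensitive requests”, here is a small sketch of an out-of-band check: the account number heard on the call is compared with the same number obtained through a second, independent channel (for example a written invoice) before any payment is made. The function names and the idea of comparing digits only are assumptions made for illustration, not something prescribed by the IBM report.

```python
import re


def normalize_account_number(raw: str) -> str:
    """Keep only the digits so spacing and dashes do not affect the comparison."""
    return re.sub(r"\D", "", raw)


def matches_second_channel(heard_on_call: str, confirmed_elsewhere: str) -> bool:
    """Return True only if both channels give the same non-empty number."""
    heard = normalize_account_number(heard_on_call)
    confirmed = normalize_account_number(confirmed_elsewhere)
    return bool(heard) and heard == confirmed


if __name__ == "__main__":
    # Same number, different formatting: safe to proceed.
    print(matches_second_channel("1234 5678 90", "1234-5678-90"))
    # Numbers differ: stop and re-verify before paying.
    print(matches_second_channel("0000000000", "1234-5678-90"))
```

The point of the design is that an attacker who controls the call still has to compromise the second channel as well before the two numbers would agree.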