Slack, one of the most popular workplace communication platforms, recently discovered a critical vulnerability in their AI Chatbot. The security flaw potentially allows hackers to gain unauthorized access into the system, retrieve data from private channels, and deliver phishing links. This potentially exposes sensitive information to unauthorized users within the same Slack workspace.

Slack AI is a set of generative AI tools built into the Slack platform that allows users to be more productive. These tools can summarize conversations, answer questions, and help users find information instantly. However, a flaw in the system could have enabled threat actors to manipulate the language model used for content generation.

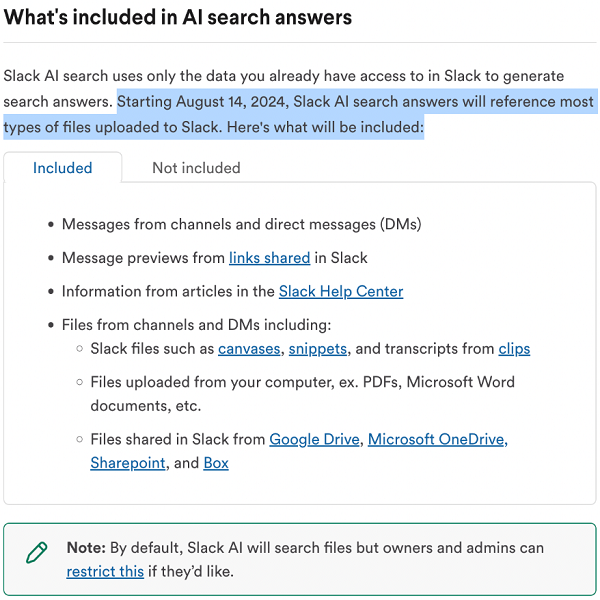

PromptArmor discovered that the problem is called Prompt Injection, specifically Indirect Prompt Injection, one of the many vulnerabilities of Large Language Models (LLMs). It is a technique where hackers can manipulate AI systems by inserting malicious instructions into the data the AI processes. This vulnerability became even riskier after August 14th, when Slack started including uploaded documents and Google Drive files in its AI processing.

The attack is quite clever. It starts with a user storing sensitive information, like an API key, in a private Slack channel. Meanwhile, an attacker creates a public channel with a hidden, malicious message. When a user asks Slack AI about their private information, the AI mistakenly includes the attacker’s public message in its search. The AI then follows the hidden instructions, presenting the user with a fake error message and a dangerous link.

If the user clicks this link, thinking it’s legitimate, their private information is sent directly to the attacker. What makes this attack very tricky is that Slack AI only cites the user’s private channel, making the response seem trustworthy.

Another version of this attack focuses on phishing. An attacker plants a malicious message in a public channel, designed to trick Slack AI. When a user asks the AI to summarize messages, perhaps from their manager, the AI includes the attacker’s message in its response. This can result in the user seeing a phishing link that appears to be part of the legitimate summary. While Slack AI sometimes cites the source of this injected content, the inconsistent behavior leaves room for exploitation in other instances.

In response, Slack stated that on August 20, 2024, a security researcher publicly disclosed this vulnerability. Slack quickly investigated the report and quickly deployed a patch the same day to address the issue. The company stated they have no evidence of any unauthorized access to customer data due to this flaw.

Slack’s prompt response to the vulnerability was impressive and reassuring. It also demonstrates how AI can be exploited by cyber criminals and used to carry out various cyber attacks. As AI becomes greatly integrated into our daily work tools, it’s crucial that we stay vigilant and aware of potential vulnerabilities. Stay up to date with information, exercise caution with sensitive information, and follow best security practices.