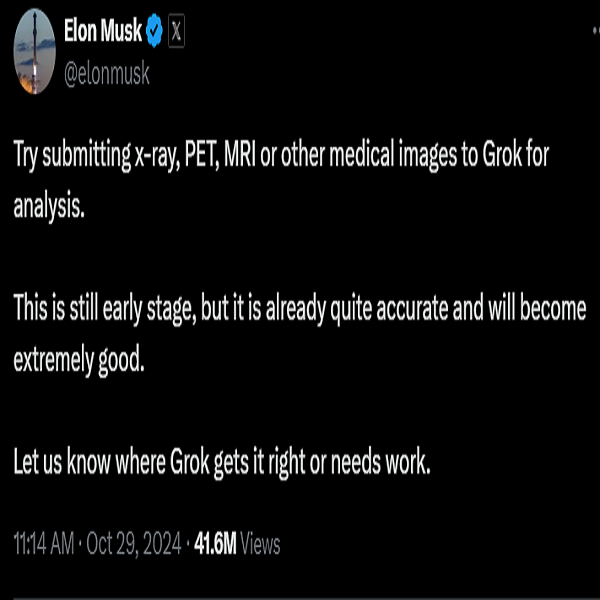

Artificial Intelligence (AI) is advancing in numerous sectors, and the health sector is no exception. Companies are investing in AI-assisted medical tools intended to enhance a range of medical processes. AI models require vast amounts of data to improve their accuracy, which has led to the practice of medical image sharing. Elon Musk recently urged users on X to upload medical imagery to Grok (his platform’s AI chatbot), claiming it would help train the AI to read scans and generate medical data more precisely.

In the weeks that followed, users on X have been submitting X-rays, MRIs, CT scans and other medical images to Grok and requesting diagnoses. With the rising need for quick answers to complex medical questions, many users are drawn to the solutions AI platforms provide. While the technology promises groundbreaking assistance for doctors and patients, many security experts are concerned about possible violations of user privacy and other ethical issues.

One of the major concerns is that traditional healthcare providers (hospitals, health insurers and doctors) are bound by strict regulations such as the Health Insurance Portability and Accountability Act (HIPAA), whereas social media platforms and AI chatbots are generally not subject to the same health care privacy regulations. This means the storage, sharing, and usage of sensitive medical information remain dangerously uncertain.

Uploading sensitive medical information to AI platforms creates privacy risks. These images could potentially be used to train future AI models, shared with unknown third-party vendors, or accidentally exposed in public datasets. The privacy policies of many companies, Grok’s included, do not detail how user data will be used, and the companies reserve the right to change those policies without user consent.

Security professionals have also discovered private medical records in AI training datasets. This means that personal health information could be viewed, analyzed, or misused by anyone with access to these datasets. Relying on AI for medical advice isn’t just a privacy issue. It also exposes users to the risk of misdiagnosis and of overreliance on algorithms that lack the sound medical judgement of trained healthcare professionals.

Some individuals might feel they are contributing to technological advancement by sharing their medical images. Researchers call this “information altruism”: the belief that sharing data serves a greater good. However, experts caution that the risks outweigh the potential benefits. AI models are far from perfect: they are prone to hallucination, and they are vulnerable to cyberattacks that could expose sensitive medical data.

As generative AI’s role in medical advice expands, experts continue to warn individuals about the risks of uploading medical images to AI platforms. Healthcare providers and technology companies must prioritize robust privacy safeguards and abide by the standards set by regulatory bodies.