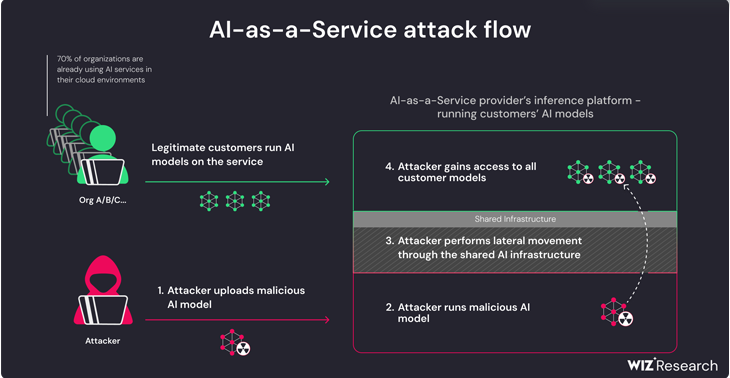

Recent research has uncovered critical vulnerabilities affecting artificial intelligence (AI)-as-a-service providers like Hugging Face. These vulnerabilities pose significant risks, potentially allowing threat actors to escalate privileges, access other customers’ models, and compromise CI/CD pipelines.

What is AI-as-a-Service (AIaaS)?

AI-as-a-Service (AIaaS) is a model in which artificial intelligence (AI) capabilities are provided to users as a service over the internet. It's like renting AI tools and technologies instead of buying and maintaining them yourself. Just as you subscribe to a streaming service to watch movies and TV shows without owning them, AIaaS lets you use AI tools, algorithms, and computing power without building or managing them on your own servers. This can include services like natural language processing, image recognition, predictive analytics, and more, all delivered on a pay-as-you-go basis.

Who is Hugging Face?

Hugging Face offers an AIaaS platform focused on natural language processing (NLP). Their services include pre-trained models for tasks like text classification, language translation, and question-answering, as well as tools for building custom NLP models.

The Identified Vulnerability

Wiz researchers examined Hugging Face's infrastructure and looked for ways to weaponize the defects they found. They discovered that anyone could upload an AI/ML model to the site, even one based on the Pickle format.

What is the Pickle Format?

Python uses the Pickle format to serialize and deserialize objects, and in AI it is commonly used to save trained machine-learning models. Loading a Pickle file can execute arbitrary code embedded in it, so allowing untrusted Pickle-format models to be uploaded to the service means that attackers could upload malicious models. These models could then be deployed and run on the shared infrastructure, where they could compromise the security of the system.
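To make the risk concrete, here is a minimal sketch of how a Pickle file can run code when it is loaded. The class name `MaliciousModel` is hypothetical, and the payload is deliberately harmless (it only evaluates `6 * 7`); a real attacker could substitute any callable, such as one that runs a shell command.

```python
import pickle


class MaliciousModel:
    """Hypothetical stand-in for a booby-trapped "model" object."""

    def __reduce__(self):
        # pickle stores this (callable, args) pair and calls it on load.
        # A harmless expression is used here purely for illustration.
        return (eval, ("6 * 7",))


# The attacker serializes the object and uploads the resulting bytes.
blob = pickle.dumps(MaliciousModel())

# The victim only "loads a model", yet the embedded call runs immediately.
result = pickle.loads(blob)
print(result)  # 42, the output of the embedded call, not a model object
```

Note that the loader never needs to call any method on the result: the code runs during `pickle.loads` itself, which is why the Python documentation warns against unpickling data from untrusted sources.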

The potential risks involved:

Shared Inference Infrastructure Takeover: Attackers can gain control over the computing resources (like servers or processors) shared among multiple AI service users. An attacker who takes over this infrastructure could manipulate or interfere with the AI models running on it. This is particularly concerning because it means that an attacker could influence the behavior of multiple customers' models at once, possibly leading to widespread security issues or data breaches.

Shared CI/CD Takeover: CI/CD pipelines are the automated processes used to build, test, and deploy software applications, including AI models. If attackers gain control over the CI/CD pipeline of the AI service, they could inject malicious code. They can also tamper with the deployment process. This allows deployment of compromised or malicious AI models to production environments, posing a significant security risk.

Furthermore, this vulnerability allows attackers to take over the CI/CD pipeline to perform a supply chain attack.

What is a Supply Chain Attack?

A supply chain attack targets the processes or tools used to develop, test, and deploy software, rather than the finished product itself. In this case, taking over the CI/CD pipeline could allow attackers to inject malicious code into the deployment process, leading to compromised or malicious AI models being deployed to production environments. The consequences could be serious, as the compromised models could affect the security and integrity of every system that relies on them for decision-making or other tasks.

What is the way forward?

Hugging Face says it has mitigated the risks identified by Wiz. To identify and reduce threats to the platform going forward, the company has adopted a cloud security posture management solution and vulnerability scanning, and it now conducts annual penetration testing.

Hugging Face also recommended that customers switch from Pickle files, which are insecure by design, to Safetensors, a format the company developed to store tensors.
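The sketch below illustrates why a data-only layout avoids the problem. It is a simplified, standard-library imitation of a Safetensors-style file (a length prefix, a JSON header, then raw bytes), not the real `safetensors` library. The helper names `save_tensors` and `load_tensors` are hypothetical, and the header fields are assumptions modeled on the published format.

```python
import json
import struct


def save_tensors(tensors):
    """Pack {name: (dtype, shape, raw_bytes)} into a single blob."""
    header, payload = {}, b""
    for name, (dtype, shape, raw) in tensors.items():
        start = len(payload)
        payload += raw
        header[name] = {
            "dtype": dtype,
            "shape": shape,
            "data_offsets": [start, len(payload)],
        }
    header_bytes = json.dumps(header).encode("utf-8")
    # Layout: 8-byte little-endian header length, JSON header, raw data.
    return struct.pack("<Q", len(header_bytes)) + header_bytes + payload


def load_tensors(blob):
    """Read the blob back using only JSON parsing and byte slicing."""
    (header_len,) = struct.unpack("<Q", blob[:8])
    header = json.loads(blob[8:8 + header_len].decode("utf-8"))
    data = blob[8 + header_len:]
    return {
        name: (meta["dtype"], meta["shape"],
               data[meta["data_offsets"][0]:meta["data_offsets"][1]])
        for name, meta in header.items()
    }


# Four 32-bit floats standing in for a tiny 2x2 weight matrix.
weights = struct.pack("<4f", 0.1, 0.2, 0.3, 0.4)
blob = save_tensors({"layer.weight": ("F32", [2, 2], weights)})
restored = load_tensors(blob)
```

Nothing in `load_tensors` calls a function named by the file, so a malicious upload can at worst supply bad numbers or a malformed header, not code that runs on load.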