Patronus AI, a San Francisco-based startup focused on AI safety and evaluation, has completed a $17 million Series A funding round. Led by Notable Capital (formerly GGV Capital), the round saw significant participation from Lightspeed Venture Partners, Datadog, and prominent tech executives such as Gokul Rajaram and Jonathan Frankle. The funding is intended to advance Patronus AI’s mission of ensuring the safe and effective deployment of generative AI models in enterprise settings.

Credit: Patronus AI

The rapid adoption of generative AI models such as OpenAI’s GPT-4o has brought several challenges to light, which led Patronus AI to develop a first-of-its-kind automated evaluation platform designed to detect critical issues in AI outputs, such as hallucinations, copyright infringement, and safety violations. The platform tests AI models, asks tricky questions, and produces detailed reports to ensure the models perform well and don’t make surprising mistakes, reducing the manual effort most enterprises currently spend on evaluation. Since its launch in September 2023, Patronus AI’s tools have been widely adopted and praised for their efficacy.

Funding Details and Purpose

The $17 million Series A funding round was led by Glenn Solomon at Notable Capital, who will be joining Patronus AI’s board. Glenn is a brilliant investor who has supported legendary companies over the years, including HashiCorp, Vercel, Square, Airbnb, Slack, Opendoor, Monte Carlo, and Zendesk. The round also included contributions from Lightspeed Venture Partners, Datadog, Factorial Capital, and several influential tech leaders. The newly secured capital will enable Patronus AI to expand its operations, enhance its automated evaluation platform, and accelerate the adoption of AI safety measures across industries. To address growing global demand, Patronus AI plans to establish new offices and increase its presence in key markets worldwide.

Innovative Solutions for AI Safety

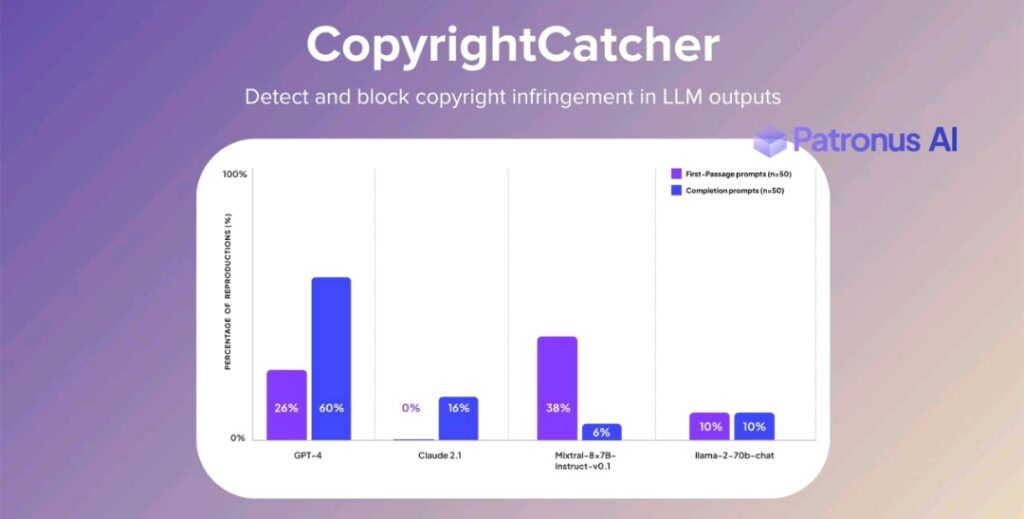

Patronus AI’s innovative tools, such as FinanceBench and CopyrightCatcher, have set new standards in AI evaluation. FinanceBench provides a standardized benchmark for assessing large language models (LLMs) in the financial domain, while CopyrightCatcher detects copyright violations in LLM outputs. These tools have been instrumental in helping Fortune 500 companies and leading AI firms improve the accuracy and reliability of their AI models.

Credit: Sahar Mor

“Measuring LLM performance in an automated way is really difficult and that’s just because there’s such a wide space of behavior, given that these models are generative by nature,” explained Anand Kannappan, Co-Founder of Patronus AI. “But through a research-driven approach, we’re able to catch mistakes in a very reliable and scalable way that manual testing fundamentally cannot.” Patronus AI’s platform has processed millions of requests, detecting hundreds of thousands of errors in both offline and online settings.

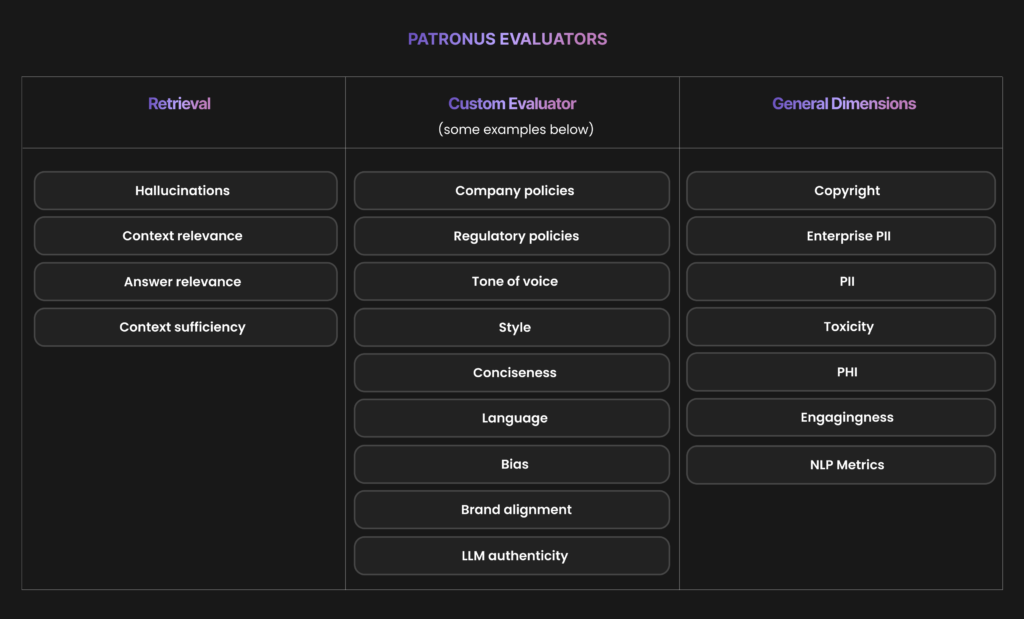

With the rise of generative AI applications, thorough evaluation has become essential. High-profile failures, such as Air Canada’s misleading AI assistant and Chevrolet’s chatbot mishap, highlight the risks of deploying untested AI models. Patronus AI’s platform mitigates these risks by offering an end-to-end solution for evaluating AI systems regardless of their purpose or underlying model. At the core of the platform is a set of Patronus evaluator models that customers can use, with just one line of code, to assess performance across a broad range of categories, from accuracy and hallucination to brand alignment and Personally Identifiable Information (PII) leakage.

Credit: Patronus AI

Future Vision and Expansion

Looking ahead, Patronus AI plans to continue its work in AI evaluation. The company is focused on developing state-of-the-art evaluation models, introducing new AI-powered features for automated testing, and expanding its research and development efforts. By advancing the state of the art in AI evaluation, Patronus AI aims to set new standards in AI safety and reliability, helping enterprises deploy AI models with greater confidence and security.