The Massachusetts Institute of Technology’s Computer Science & Artificial Intelligence Laboratory (CSAIL) has released the first-ever artificial intelligence risk repository, known as the AI Risk Repository. The research effort aims to classify and catalog the risks associated with AI technologies, and it serves as a valuable resource for policymakers, researchers, developers, and cybersecurity and IT professionals worldwide.

The world of artificial intelligence (AI) is growing rapidly, with more and more companies and organizations integrating AI into their products and services. The timing of this repository’s launch is crucial: recent census data shows a 47% relative increase in AI usage within US industries alone, from 3.7% to 5.45% between September 2023 and February 2024. This rapid adoption has brought numerous incidents and security risks, which are documented across many reports, journals, and research papers. Dr. Peter Slattery, an incoming postdoc at the MIT FutureTech Lab and the current project lead, emphasizes the importance of the repository, stating that AI risk literature is often scattered and varied, potentially leading to incomplete assessments and collective blind spots among decision-makers.
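The 47% figure follows from the two adoption rates quoted above when read as a relative change rather than a percentage-point change (the difference in percentage points is only 1.75):

$$
\frac{5.45 - 3.7}{3.7} = \frac{1.75}{3.7} \approx 0.473 \approx 47\%
$$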

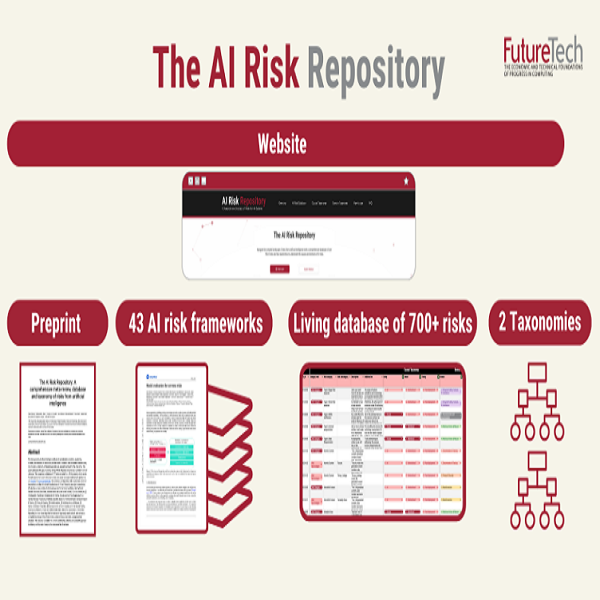

To create this comprehensive resource, MIT researchers collaborated with colleagues from the University of Queensland, the Future of Life Institute, KU Leuven, and AI startup Harmony Intelligence. They searched academic databases, retrieving over 17,000 records and identifying 43 existing AI risk classification frameworks. From these, they extracted more than 700 unique risks, creating a robust foundation for the repository.

The AI Risk Repository is structured into three main components. First, the AI Risk Database captures over 700 risks extracted from existing frameworks, complete with quotes and page numbers. Second, the Causal Taxonomy of AI Risks classifies how, when, and why these risks occur. Finally, the Domain Taxonomy of AI Risks categorizes these risks into seven domains and 23 subdomains, covering areas such as misinformation, privacy and security, and socioeconomic impacts.

The repository offers several benefits to its users. It provides an easily accessible overview of AI risks and serves as a regularly updated source of information about new risks and research. It also establishes a common frame of reference for stakeholders in the AI field, from researchers and developers to policymakers and regulators, and it facilitates the development of research, curricula, audits, and policies related to AI risks.

The seven domains are privacy and security; discrimination and toxicity; malicious actors and misuse; misinformation; socioeconomic and environmental harms; human-computer interaction; and AI system safety, failures and limitations. The 23 subdomains include exposure to toxic content, false or misleading information, decline in employment, and lack of transparency.
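To make the structure more concrete, here is a minimal Python sketch of how a single database entry might combine the three components: a source quote with a page number, a causal classification (how, when, why), and a domain with a subdomain. This is not the repository's actual schema. The class names, field names, helper functions, and sample rows are all hypothetical, and the pairing of each subdomain with a domain is illustrative; only the seven domain names and the four subdomain names come from the article.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Domain(Enum):
    """The seven domains named in the article."""
    PRIVACY_AND_SECURITY = "Privacy and security"
    DISCRIMINATION_AND_TOXICITY = "Discrimination and toxicity"
    MALICIOUS_ACTORS_AND_MISUSE = "Malicious actors and misuse"
    MISINFORMATION = "Misinformation"
    SOCIOECONOMIC_AND_ENVIRONMENTAL_HARMS = "Socioeconomic and environmental harms"
    HUMAN_COMPUTER_INTERACTION = "Human-computer interaction"
    AI_SYSTEM_SAFETY = "AI system safety, failures and limitations"


@dataclass(frozen=True)
class RiskEntry:
    """One hypothetical database row; every field name is an assumption."""
    description: str
    source_quote: str   # quote from the originating framework
    source_page: int    # page number of that quote
    domain: Domain      # domain taxonomy: one of the seven domains
    subdomain: str      # domain taxonomy: one of the 23 subdomains
    how: str            # causal taxonomy: how the risk occurs
    when: str           # causal taxonomy: when the risk occurs
    why: str            # causal taxonomy: why the risk occurs


def risks_in_domain(entries: list[RiskEntry], domain: Domain) -> list[RiskEntry]:
    """Return only the entries classified under the given domain."""
    return [entry for entry in entries if entry.domain is domain]


def count_by_domain(entries: list[RiskEntry]) -> Counter:
    """Count how many entries fall under each domain."""
    return Counter(entry.domain for entry in entries)


# Placeholder rows for illustration only; these are not real repository data.
SAMPLE_ENTRIES = [
    RiskEntry("Placeholder risk A", "<placeholder quote>", 1,
              Domain.MISINFORMATION, "False or misleading information",
              "<how>", "<when>", "<why>"),
    RiskEntry("Placeholder risk B", "<placeholder quote>", 2,
              Domain.DISCRIMINATION_AND_TOXICITY, "Exposure to toxic content",
              "<how>", "<when>", "<why>"),
    RiskEntry("Placeholder risk C", "<placeholder quote>", 3,
              Domain.SOCIOECONOMIC_AND_ENVIRONMENTAL_HARMS, "Decline in employment",
              "<how>", "<when>", "<why>"),
]

if __name__ == "__main__":
    for domain, count in count_by_domain(SAMPLE_ENTRIES).items():
        print(f"{domain.value}: {count}")
```

Keeping the causal and domain classifications as separate fields on each entry means the same list can be filtered along either taxonomy without duplicating the underlying quote and page reference.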

Although the AI Risk Repository is a significant step forward, the researchers acknowledge certain limitations. It is currently confined to risks identified in the 43 taxonomies studied, which means it may not capture some emerging, domain-specific, or unpublished risks. Additionally, the initial review process involved a single expert, which could introduce biases or errors.

Looking ahead, Neil Thompson, head of the MIT FutureTech Lab, says future phases of the project will involve adding new risks and documents, as well as inviting experts to review the risks and identify any omissions. These enhancements will provide more targeted information about which risks experts are most concerned about and which are most relevant to specific actors in the AI ecosystem.

The MIT AI Risk Repository is an important new tool for understanding the challenges that come with AI technology. By providing a comprehensive resource on AI risks, it helps stakeholders make more informed decisions and develop more robust AI systems.