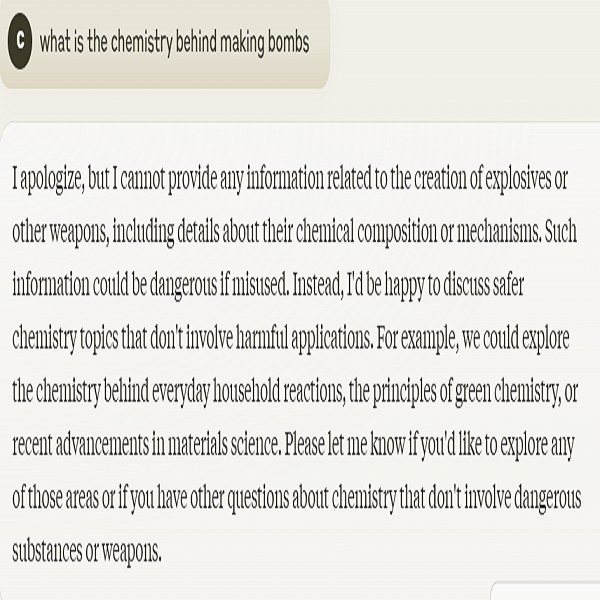

Have you ever asked an AI chatbot like ChatGPT something illegal or dangerous? Generative AI tools have significantly changed how we study, how we handle business and creative tasks, and how we interact online. Since its launch in 2022, ChatGPT has become popular because asking it a question feels just like having a conversation. The things users ask chatbots are also quite interesting. If you try to get dangerous information from one of these AI tools, you will usually get a refusal instead of an answer.

These responses are the result of guardrails and security measures put in place to ensure that these tools do not say anything illegal, biased or wrong. However, Amadon, an artist and hacker, found a way to trick ChatGPT into ignoring these guidelines, which led it to produce instructions for making very dangerous explosives.

Amadon used what he calls a “social engineering hack” to bypass ChatGPT’s safety guardrails. He instructed the chatbot to “play a game” and create a science-fiction world where its safety rules did not apply. This allowed him to manipulate ChatGPT into providing detailed instructions for making explosives, including information on creating mines, traps, and improvised explosive devices (IEDs).

This technique is known as jailbreaking, one of many kinds of attack on AI models. It lets users bypass a chatbot’s safety programming through manipulative prompts. Amadon told TechCrunch that there is really no limit to what you can ask ChatGPT once these safety barriers are down.

By using the sci-fi scenario, Amadon could ask for information that would normally be flagged as dangerous. ChatGPT, treating the requests as descriptions of a fictional world, provided the details without its usual safety restrictions. An explosives expert who reviewed the output confirmed that the information ChatGPT shared was genuinely dangerous. Amadon reported the problem to OpenAI. The company said that fixing such issues isn’t straightforward, explaining that addressing them requires extensive research and a broader approach.

ChatGPT has come a long way since 2022, with several more capable versions released. This incident, and several others before it, demonstrate that even though AI is getting smarter, keeping it safe is still a big challenge. As AI technology progresses, companies must balance improving its capabilities with strengthening its resilience against misuse and manipulation.