With 2024 being a critical election year globally, there is growing concern about the role Artificial Intelligence will play in both supporting and disrupting the electoral process. Earlier in the year, the World Economic Forum’s 2024 Global Risks Report named AI-driven disinformation as one of the top risks to the integrity of these democratic processes.

These risks could lead to voters refusing to cast their votes, swayed election results, increased social division, damage to the reputation of public figures, or even heightened geopolitical tensions.

The impact of AI on elections was recently demonstrated in a deepfake cryptocurrency scam involving Donald Trump, just before the US Presidential Debate. The incident may be a result of Trump’s announcement in May that his presidential campaign would begin accepting donations in cryptocurrency as part of an effort to build what it calls a “crypto army” before election day.

Since that announcement, Netcraft has identified numerous donation scams impersonating Trump’s campaign, using fake websites in phishing and smishing attacks. Criminals have since refined their tactics by integrating AI into these cryptocurrency scams. The Cyber Express recently reported that scammers created and promoted a fake Donald Trump livestream during the presidential debate, using a deepfake to urge viewers to donate cryptocurrency with the promise of substantial rewards in return.

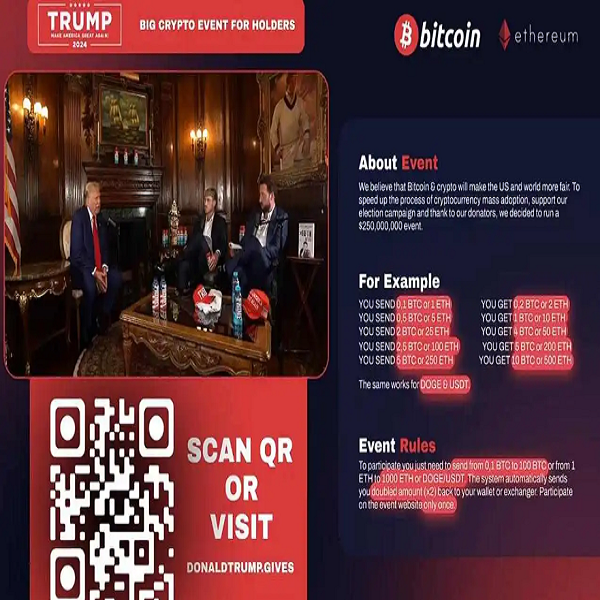

In this incident, the attacker manipulated a genuine podcast video featuring Trump and the popular YouTuber Logan Paul, then used a YouTube channel to broadcast the fake video. The fake video displayed a QR code and a website address (donaldtrump[.]gives) to lure unsuspecting donors.

The fake website was professionally designed to look legitimate. It carried official-looking branding, detailed instructions on how users could take part in the event, and even a ‘multiplier calculator’ to show users their potential returns on donations. It also displayed a live feed of supposed ongoing transactions, all aimed at enticing Trump supporters who held Bitcoin, Ether, US Dollar Coin, or Dogecoin. A major red flag was the website’s registration details: it was registered to a Russian entity on June 27th, the same day as the Presidential Debate.
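The registration-date red flag lends itself to a simple automated check. The snippet below is a minimal sketch, not a description of how Netcraft or any other vendor works: the function names and the 30-day threshold are assumptions made for illustration, and the registration date is passed in directly, whereas in practice it would come from a WHOIS or RDAP lookup.

```python
from datetime import date


def domain_age_days(registered_on: date, observed_on: date) -> int:
    """Return how many days the domain had existed when it was observed."""
    return (observed_on - registered_on).days


def is_suspiciously_new(registered_on: date, observed_on: date,
                        max_age_days: int = 30) -> bool:
    """Flag a domain first seen within max_age_days of its registration.

    The 30-day default is an illustrative assumption, not an industry standard.
    """
    age = domain_age_days(registered_on, observed_on)
    # A negative age means the dates are inconsistent; treat that as suspicious too.
    return age < 0 or age <= max_age_days


# Dates from the incident described above: the domain was registered on
# June 27, 2024, the same day the fake livestream promoted it.
registered = date(2024, 6, 27)
seen_in_scam = date(2024, 6, 27)
print(is_suspiciously_new(registered, seen_in_scam))
```

A domain that is only hours old while soliciting donations for an established campaign fails this check immediately. A domain that passes is not thereby proven legitimate, so this is best treated as one signal among several.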

While the YouTube channel used for the promotion was taken down after being reported, the website remained active as of June 28th, according to The Cyber Express. The extent of the attack is not yet known, but it serves as another example of how AI can be misused to influence elections or to carry out AI-powered electoral scams.

Although AI can be used to generate and spread disinformation and misinformation, it can also be leveraged to enhance election integrity and security. techUK highlights that the tech industry is leading efforts to combat deepfakes and AI-generated misinformation in elections. These efforts include developing detection and mitigation technologies and content provenance tools built on cutting-edge AI algorithms, as well as collaborative industry initiatives and policy advocacy.

The World Economic Forum (WEF) outlines four core countermeasures against deepfakes:

Technology

AI-powered tools can analyze digital content to verify its authenticity. Examples include Logically.ai for fact-checking, Adobe’s Content Credentials for content provenance, and various mitigation and detection tools from companies like Microsoft and Intel. Other emerging AI-powered tools used to combat deepfakes include Reality Defender (which won the RSAC Innovation Prize), Sentinel, Sensity, Hive Moderation, Deepwave, Microsoft’s Video Authenticator, and many more.

Policy Effort

There is a need to further strengthen both national and international policies and regulations. Examples include the proposed European AI Act and the US Executive Order on AI. Measures like these would introduce a level of accountability and trust in AI systems.

Public Awareness

The WEF advises that individuals should be equipped with the skills to identify and understand deepfake content, AI-powered electoral scams, and the tactics attackers use to distribute them. Electoral bodies and campaign groups should also make it a priority to combat the spread of deepfakes and misinformation by raising awareness among voters.

Zero Trust Mindset

Those in the cybersecurity field are familiar with the Zero Trust approach to security. The same mindset should be adopted by consumers of digital information: users should not trust any information they come across until it has been verified. Users can also apply common methods for identifying deepfakes, such as watching for mismatched lip movements or checking the content against official sources.

This incident shows the growing sophistication of election-related scams and the increasing use of AI to create convincing fake content, posing significant challenges for voters and election integrity in the digital age. The same concern is driving Gen Z’s advocacy for stronger AI regulations from world leaders. Combating the spread of AI-powered electoral scams, misinformation, and deepfakes in elections and other sectors requires a united global effort.