Cybercriminals are finding new ways to exploit AI, and one of the latest trends is the use of AI-generated “white pages” in malvertising campaigns. These decoy pages, designed to look legitimate, fool detection engines and let malicious ads rank high in search results. Comparing these “white pages” with the “black pages” they shield shows how attackers layer their tactics to bypass detection.

“White pages” refer to legitimate-looking decoy websites or ads that mask malicious intentions. These are in contrast to “black pages,” which are the actual malicious landing pages designed to steal credentials or deploy malware.

AI is making it easier than ever for attackers to create convincing white pages. By generating unique and visually appealing content, including text and images, criminals can bypass traditional detection systems that rely on scanning for known patterns or malicious code. These pages are convincing enough to fool even the validation systems of platforms like Google Ads.

Some examples of AI-generated white pages in action are:

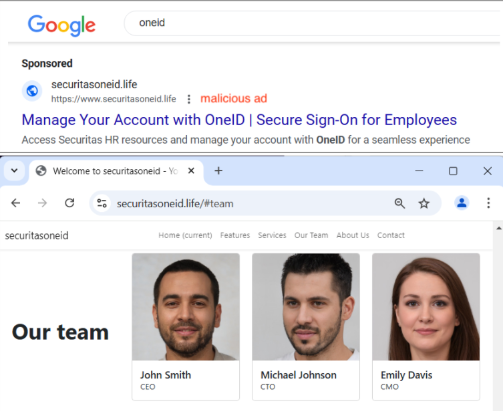

Securitas OneID Phishing Campaign: In this campaign, threat actors targeted users searching for the Securitas OneID mobile app. They purchased Google Search ads that redirected users to an AI-generated decoy site. The site featured profiles of fake executives created with AI-generated images, making it appear authentic.

While a human observer might recognize discrepancies in the content, automated systems analyzing the site’s code found nothing malicious. This allowed the ad to pass validation and appear prominently in search results.

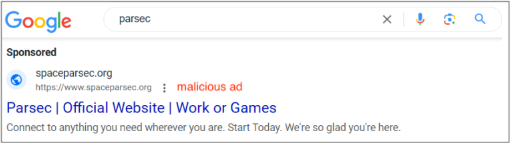

Parsec Star Wars-Themed Campaign: Another example involved the Parsec remote desktop app, popular among gamers. The attackers created a white page filled with Star Wars-themed imagery and content, playing on the dual meaning of “parsec” as both a unit of astronomical measurement and a term from the Star Wars universe.

The site included AI-generated posters and graphics that were visually impressive but completely irrelevant to the legitimate Parsec app. While humans might find the site comical or nonsensical, detection engines deemed it harmless, allowing the malicious campaign to proceed.

Implications for Cybersecurity

AI-generated white pages present significant challenges for cybersecurity defenses:

- Traditional detection systems focus on identifying known malicious patterns. AI-generated content, with its unique and seemingly innocuous appearance, evades these systems effectively.

- AI enables attackers to produce high-quality, original content at scale, making their campaigns more convincing.

- Many of these decoy pages can be easily identified by humans as fake or irrelevant. However, relying solely on human review is neither scalable nor practical for platforms managing millions of ads daily.
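To make the first point concrete, here is a minimal sketch of pattern-based scanning. The signature list and both sample pages are hypothetical, invented purely for illustration, and real scanners are far more elaborate. The sketch only shows why a page made of original, harmless-looking content gives this kind of check nothing to match.

```python
import re
from typing import List

# Hypothetical signatures, invented for illustration only.
SIGNATURES = [
    re.compile(r"eval\s*\(\s*atob\s*\(", re.IGNORECASE),                # obfuscated script loader
    re.compile(r"<iframe[^>]+display\s*:\s*none", re.IGNORECASE),       # hidden iframe
    re.compile(r"document\.location\s*=\s*['\"]http", re.IGNORECASE),   # forced redirect
]


def matched_signatures(html: str) -> List[str]:
    """Return the pattern of every signature found in the page source."""
    return [sig.pattern for sig in SIGNATURES if sig.search(html)]


def looks_malicious(html: str) -> bool:
    """Flag a page only if at least one known pattern matches."""
    return bool(matched_signatures(html))


# Hypothetical "black page": contains a known-bad construct.
BLACK_PAGE = '<html><script>eval(atob("ZG9jdW1lbnQ="))</script></html>'

# Hypothetical "white page": original text and images, no known-bad construct.
WHITE_PAGE = (
    "<html><h1>Meet our leadership team</h1>"
    '<img src="ceo.png" alt="CEO portrait">'
    "<p>We build trusted identity solutions.</p></html>"
)

if __name__ == "__main__":
    print("black page flagged:", looks_malicious(BLACK_PAGE))
    print("white page flagged:", looks_malicious(WHITE_PAGE))
```

The white page passes not because it is trustworthy but because nothing in it resembles a previously seen attack, which is the gap these campaigns exploit.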

Mitigation Techniques

- Implement stricter validation processes on ad platforms like Google Ads:

  - Cross-check ad content with known legitimate sources.

  - Use AI to identify patterns indicative of cloaking or deception.

- Verify website URLs and avoid clicking on ads that seem unusual or out of place.

- Provide training on recognizing common phishing tactics.
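Two of the checks above can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not how any ad platform actually works: the allowlist `OFFICIAL_DOMAINS`, the placeholder domain `brand.example`, the function names, and the `0.5` similarity threshold are all hypothetical. The first function verifies that a landing URL belongs to a known legitimate domain. The second compares the HTML served to a crawler with the HTML served to a regular visitor, since a large difference between the two is one possible sign of cloaking.

```python
from difflib import SequenceMatcher
from urllib.parse import urlparse

# Hypothetical allowlist; a real one would come from the brand owner.
OFFICIAL_DOMAINS = {"brand.example"}


def is_official_domain(url: str) -> bool:
    """True if the URL's host is an allowlisted domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in OFFICIAL_DOMAINS)


def content_similarity(a: str, b: str) -> float:
    """Similarity ratio between two page sources, from 0.0 to 1.0."""
    return SequenceMatcher(None, a, b, autojunk=False).ratio()


def looks_cloaked(crawler_html: str, visitor_html: str, threshold: float = 0.5) -> bool:
    """Flag a page when crawler and visitor see substantially different content.

    The threshold is an arbitrary assumption and would need tuning on real data.
    """
    return content_similarity(crawler_html, visitor_html) < threshold


if __name__ == "__main__":
    for url in ("https://login.brand.example/", "https://brand.example.evil.test/"):
        print(url, "->", is_official_domain(url))
```

Note that the domain check compares the full hostname rather than searching for the brand name as a substring, so a lookalike host that merely starts with the brand name is rejected. Fetching the two page versions (for example with different user agents or referrers) is left out here, because how to do that reliably depends on the platform.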

In conclusion, AI-generated malvertising white pages highlight the double-edged nature of AI in cybersecurity. While AI offers powerful tools for detection and defense, it also gives criminals new methods to evade security measures. To combat this threat, organizations must adopt advanced detection technologies, enhance validation processes, and foster a culture of vigilance and collaboration.