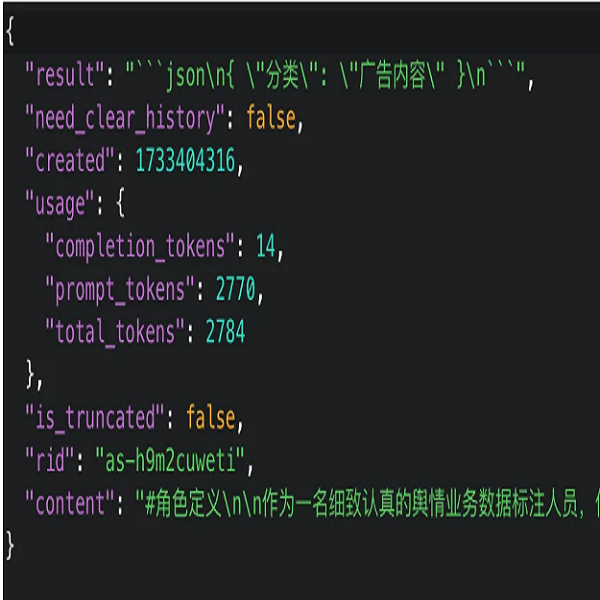

Security researcher NetAskari has discovered an exposed dataset that reveals an AI-powered system designed by China to censor and filter public content. The dataset was stored in an unsecured Elasticsearch database hosted on a Baidu server.

The database contains entries dated as recently as December 2024 and was left completely unsecured, so anyone who found it could access its contents. The AI system it describes represents a significant advance in content filtering technology: it takes a sophisticated approach to narrative control and is able to identify and filter even the most subtle forms of criticism.

According to expert analysis, this approach differs from traditional censorship mechanisms. As one researcher noted, “Unlike traditional censorship methods that rely on human labor for keyword-based filtering and manual review, a Large Language Model (LLM) trained on such instructions would significantly improve the efficiency and granularity of state-led information control.”
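
The limitation of keyword-based filtering that the researcher describes can be illustrated with a minimal sketch. The blocklist, function name, and sample sentences below are hypothetical and chosen only for illustration; none of them come from the exposed dataset, and the sketch does not implement an LLM.

```python
import re

# Hypothetical blocklist for illustration only; not taken from the dataset.
BLOCKLIST = frozenset({"outage", "recall"})

def keyword_flag(text, blocklist=BLOCKLIST):
    """Return True if any blocklisted term appears as a whole word in text."""
    # Lowercase the text and split it into simple word tokens.
    words = set(re.findall(r"[a-z']+", text.lower()))
    return not words.isdisjoint(blocklist)

direct = "The company announced a product recall today."
indirect = "The company is quietly asking customers to send the product back."

print(keyword_flag(direct))    # True: contains the literal word "recall"
print(keyword_flag(indirect))  # False: same meaning, but no blocklisted word
```

The second sentence carries the same meaning as the first but passes the filter because it avoids the listed word. A classifier that judges meaning rather than surface vocabulary would close that gap, which is the gain in granularity the quote refers to.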

From a cybersecurity perspective, the system raises several concerns. It shows that content manipulation can be automated at large scale, with direct implications for digital freedom and information control. AI-powered content filtering of this kind could be used to suppress alternative narratives and maintain strict control over digital communication channels.

This discovery demonstrates the rapidly advancing capabilities of AI in managing and potentially manipulating digital communication. However, similar AI-powered content filtering technologies could also be put to defensive use: identifying potential cybersecurity threats embedded in text, detecting subtle patterns of malicious communication, and providing early warning of emerging digital risks.