CVE-2024-5208 is a significant vulnerability in “anything-llm”, a tool from mintplex-labs for creating and managing AI infrastructure. The flaw allows attackers to cause a denial of service (DoS) by sending invalid requests to the upload-link endpoint, which can be particularly disruptive for systems that rely on AI.

What’s the Problem?

The vulnerability involves uncontrolled resource consumption. An attacker can exploit it in two ways:

- Empty body attack: sending a request with an empty body and a ‘Content-Length: 0’ header.

- Fake Content-Length: sending a request with random content and a misleading ‘Content-Length’ header.

Both methods can force the server to shut down, leading to a DoS. The attacker needs at least a ‘Manager’ role to carry out this attack, which means insider threats and compromised accounts are the most likely vectors.

AI systems, especially those built on large language models (LLMs), depend heavily on their underlying infrastructure. A vulnerability like CVE-2024-5208 can disrupt the entire AI operation by taking down the server and making the AI services unavailable. This is particularly critical for applications that need high availability and reliability.

Technical Breakdown

- Affected Versions: “anything-llm” versions before 1.0.0.

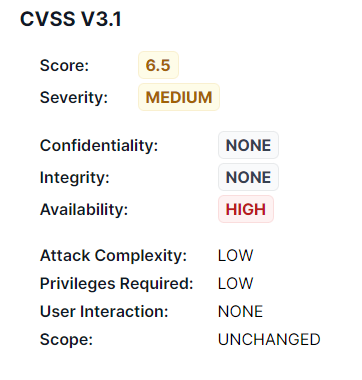

- Severity: Medium, with a CVSS score of 6.5.

- Impact: High on availability (causing DoS), none on confidentiality or integrity.

- Attack Vector: Network-based attack that requires low privileges and no user interaction.
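
The 6.5 score can be reproduced from the CVSS v3.1 base-score formula. The sketch below is illustrative only: the attack vector, privileges, user interaction, and impact values follow the bullets above, while low attack complexity and unchanged scope are assumptions that this article does not state.

```python
import math

# CVSS v3.1 metric weights. Values marked "assumed" are not stated in the article.
AV_NETWORK = 0.85    # Attack Vector: Network
AC_LOW = 0.77        # Attack Complexity: Low (assumed)
PR_LOW = 0.62        # Privileges Required: Low, with Scope: Unchanged (scope assumed)
UI_NONE = 0.85       # User Interaction: None
IMPACT_NONE = 0.0    # Confidentiality and Integrity: None
IMPACT_HIGH = 0.56   # Availability: High


def roundup(value: float) -> float:
    """Round up to one decimal place, as defined in the CVSS v3.1 specification."""
    int_input = round(value * 100000)
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0


def base_score(av, ac, pr, ui, c, i, a) -> float:
    """Base score for the scope-unchanged case."""
    iss = 1 - (1 - c) * (1 - i) * (1 - a)       # impact sub-score
    impact = 6.42 * iss
    exploitability = 8.22 * av * ac * pr * ui
    if impact <= 0:
        return 0.0
    return roundup(min(impact + exploitability, 10))


score = base_score(AV_NETWORK, AC_LOW, PR_LOW, UI_NONE,
                   IMPACT_NONE, IMPACT_NONE, IMPACT_HIGH)
print(score)  # 6.5
```

With these inputs the impact term is about 3.60 and the exploitability term about 2.84, which sum to roughly 6.43 and round up to 6.5. Availability is the only contributor to the impact term.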

Imagine an AI customer support system that uses “anything-llm” to process language queries. If an attacker exploits CVE-2024-5208, the entire system could be taken offline, preventing it from handling customer queries and potentially causing significant business disruptions.

What Can You Do?

To mitigate this vulnerability:

- Update your “anything-llm” deployment to version 1.0.0 or later, which addresses this issue.

- Validate incoming requests to the upload-link endpoint, so that empty bodies and mismatched ‘Content-Length’ headers are rejected with an error response instead of bringing the server down.

- Set up monitoring to detect unusual activity patterns that might indicate an ongoing attack.

- Lastly, limit the ‘Manager’ role to trusted individuals to minimize the risk of exploitation.
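
As a rough illustration of the request-validation step, here is a minimal, framework-agnostic sketch. It is not the actual “anything-llm” fix: the function name, the size cap, and the assumption that the endpoint expects a JSON body with a `link` field are all hypothetical. The point it shows is that malformed input should yield a 4xx response rather than an unhandled error.

```python
import json
from urllib.parse import urlparse

MAX_BODY_BYTES = 4096  # assumed cap; a payload carrying one link should be small


def validate_upload_link_request(headers: dict, body: bytes):
    """Return (status_code, link_or_error_message). Never raises on bad input."""
    normalized = {k.lower(): v for k, v in headers.items()}
    declared = normalized.get("content-length")
    if declared is None or not (declared.isascii() and declared.isdigit()):
        return 411, "a numeric Content-Length header is required"
    if int(declared) == 0 or len(body) == 0:
        return 400, "request body must not be empty"
    if int(declared) != len(body):
        return 400, "Content-Length does not match body size"
    if len(body) > MAX_BODY_BYTES:
        return 413, "request body too large"

    try:
        payload = json.loads(body)
    except ValueError:  # covers JSONDecodeError and UnicodeDecodeError
        return 400, "body is not valid JSON"

    # Hypothetical schema: {"link": "<http(s) URL>"}
    link = payload.get("link") if isinstance(payload, dict) else None
    if not isinstance(link, str):
        return 400, "a string 'link' field is required"
    try:
        parsed = urlparse(link)
    except ValueError:
        return 400, "link is not a valid URL"
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        return 400, "link must be an http(s) URL"
    return 200, link


# Usage: both request shapes described in this article are rejected cleanly.
print(validate_upload_link_request({"Content-Length": "0"}, b"")[0])
print(validate_upload_link_request({"Content-Length": "9"}, b"asdasdasd")[0])
```

In a real deployment the HTTP server usually handles message framing itself, so the explicit length comparison is defensive rather than essential. What matters is that every failure path returns an error to the caller and leaves the process running.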

In conclusion, CVE-2024-5208 is a reminder that securing AI systems involves more than just protecting the AI models. Safeguarding the infrastructure that supports these models against vulnerabilities that can disrupt AI services is equally important.