What happens when you combine smart glasses, facial recognition technology and secret code?

In a recent demonstration of how AI technology can pose unexpected security risks, two Harvard students designed a project that raises concerns about privacy. AnhPhu Nguyen and Caine Ardayfio, both juniors studying Human Augmentation and Physics respectively, built a system they called I-XRAY.

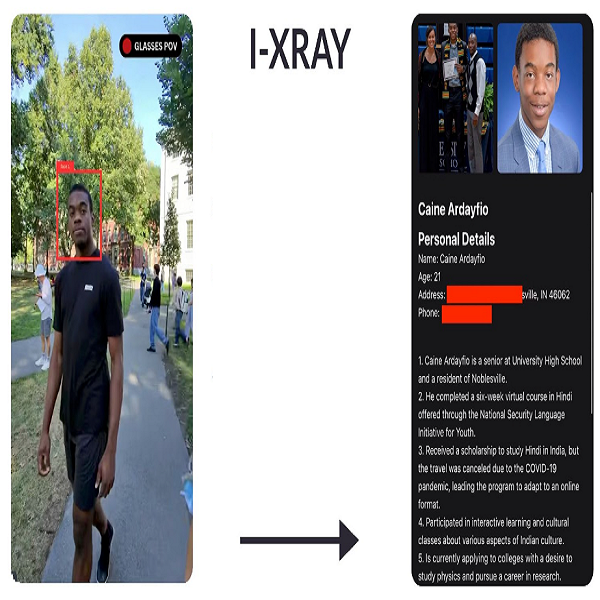

I-XRAY was built from a combination of Ray-Ban Meta smart glasses, facial recognition technology and customized software that searches for and finds people’s identities, phone numbers, and addresses in real time. They shared a video on X that explained their project.

The system works by capturing video from the smart glasses. A bot then takes this video and tries to detect a face in it. Once a face is detected, the bot runs the image through a reverse image search tool called PimEyes, which finds similar images online. An AI model then determines the person’s name from the results of the image search. Once the system has a name, it uses it to search online articles and voter registration databases for other personal details. Finally, a Large Language Model (LLM) compiles the data, within a minute, in an app the students created.

In their demonstration, Nguyen, Ardayfio and other students behind the project were able to identify several classmates and confirmed that information such as their addresses and the names of their relatives was mostly accurate. Taking this a step further, they engaged in conversations with complete strangers in public places, pretending to know them based on the information gathered by their system. “Using our glasses, we were able to identify dozens of people, including Harvard students, without them ever knowing,” said Ardayfio.

Advances in LLMs and their integration with facial recognition technology are what make I-XRAY fully automatic and able to carry out real-time data extraction. Nguyen said that most people did not even know that tools capable of finding someone’s home address from their name could exist, so people were rightfully scared.

The students emphasize that their goal is not to misuse this technology but to raise awareness about the current capabilities of smart devices and AI when combined with publicly available data. They do not intend to release their code because they are aware of the potential for misuse. Nguyen suggests there could be positive applications for this technology, such as in networking situations or assisting individuals with memory loss.

The primary purpose of their project is to demonstrate the need for better privacy protections. They offered a helpful guide for users to remove personal information from reverse face search engines like PimEyes and Facecheck.id, as well as from people search engines that compile data from public records. Additionally, they advise taking steps to prevent identity theft, such as adding two-factor authentication to important accounts and freezing credit reports.

It is important to understand that these tools already exist and that it is only a matter of time before cyber criminals are able to replicate such a system and use it for malicious purposes. As AI advances, increasingly sophisticated tools will be developed, and they could increase security risks unless stronger privacy and security measures are put in place.