Microsoft has recently unveiled PyRIT, an open-source automation framework aimed at simplifying the testing of AI tools. PyRIT is specifically crafted to identify vulnerabilities in generative AI tools, aiming to enhance the security and dependability of AI systems.

PyRIT stands for Python Risk Identification Tool. It is a proactive way to identify potential risks associated with generative AI systems.

Ram Shankar Siva Kumar, the AI red team lead at Microsoft, draws attention to the value of PyRIT in helping businesses deal with the challenges in assessing AI risk. He highlights how the tool can be used to evaluate how resistant large language models (LLMs) are against several forms of damage, such as fabrication (like hallucinations), abuse (like bias), and forbidden material (like harassment).

Why should AI Tools be tested?

AI tools should be tested to ensure they are safe and reliable. With tools like PyRIT, we can identify risks in AI systems, like inaccuracies or behaving unfairly, and fix them before they affect the privacy of people.

“Red teaming” an AI tool means testing it to find weaknesses or problems. It is playing the role of a cyberattacker to see where the security is weak. With AI tools, red teaming involves trying different ways to trick or break the tool to see if it behaves safely and correctly. This helps improve the tool’s security and reliability by finding and fixing any issues before they can be exploited by cyber attackers.

Challenges in Testing AI Tools

Testing AI tools is not very straightforward, especially when they create things text or images. Microsoft has learned that it’s different from testing traditional software. There are three main challenges:

Finding Different Types of Problems:

AI systems can create content that’s unfair or inaccurate. So, when testing them, you need to look for both security problems and issues with fairness and accuracy.

AI is Not Always Predictable:

Unlike regular software, AI can give different answers even with the same input. This makes it harder to test because you can’t always know what it will do.

AI Systems Come in Different Shapes:

AI systems can be used in many ways, like standalone programs or parts of other software. This makes testing them even harder because each one is different.

Why Automation Helps

While it’s important to check AI Tools manually, it takes a lot of time. That’s where PyRIT comes in. It helps by automating some tasks and finding areas that need extra attention.

PyRIT started as a simple set of scripts but has grown into a powerful tool. Microsoft’s AI Red Team uses it to test AI tools quickly and efficiently.

Cybersecurity in view: PyRIT’s Five Interfaces

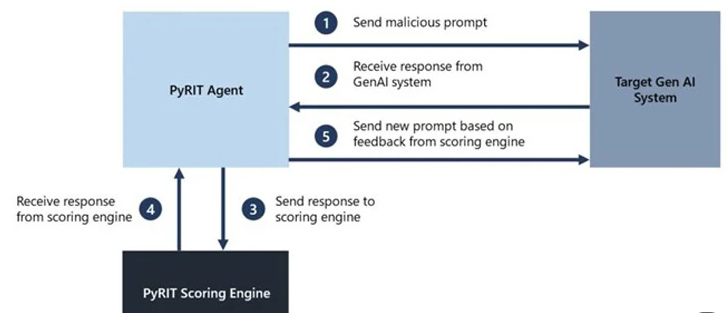

The five interfaces that makeup PyRIT’s core are each intended to make the process of identifying risks easier. They include;

Targets: These are the AI tools that PyRIT tests. It can test text-based systems and can work with different AI platforms.

Datasets: These are the harmful prompts PyRIT uses to test the AI tools.

Scoring Engine: This decides if the AI tool’s response is good or bad.

Attack Strategies: These are the different ways PyRIT tests the AI Tool.

Memory: This stores what PyRIT finds during testing for later analysis.

This extensive functionality speaks to PyRIT’s ability to handle a wide range of AI risk scenarios. Moreover, PyRIT can do more than just detect traditional AI threats. It can also identify privacy issues like identity theft and security risks like virus production.

Balancing Automation and Human Expertise:

Microsoft notes that while PyRIT speeds up the risk assessment process, manual red teaming of generative AI systems is still necessary. Instead, it enhances human expertise by highlighting specific pointers of risk and encouraging additional research.

Conclusion:

PyRIT’s release aligns with increasing concerns about security flaws in AI tools. With PyRIT’s launch, the AI tools will be better equipped to withstand new threats and promote ethical development.

PyRIT introduces a new era of proactive AI governance by equipping organizations with a thorough risk assessment methodology, reducing risks, and encouraging confidence in artificial intelligence (AI) tools.