Researchers at Barracuda have recently discovered a new large-scale phishing attack in which threat actors pretend to be OpenAI. As generative AI technology continues to advance, cyber criminals are using it as a tool to make their fake emails sound more professional and convincing.

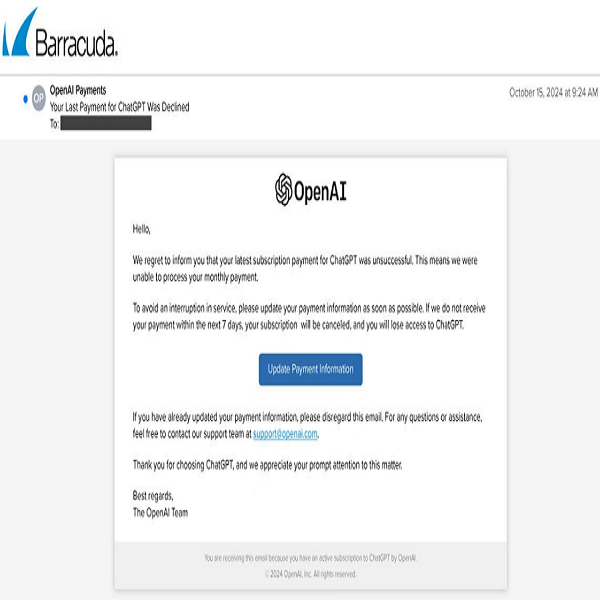

In this campaign, attackers impersonating OpenAI sent fake emails to businesses worldwide. The emails claimed to be from OpenAI’s payment department and urgently asked users to update their payment information so that their monthly ChatGPT subscription could be charged. The attackers sent over 1,000 emails from a single suspicious domain in an attempt to look like official OpenAI messages.

The security team at Barracuda, who discovered this scam, found several obvious red flags.

1. Sender’s email address: The email is from info@mta.topmarinelogistics.com, which does not match the official OpenAI domain (e.g., @openai.com).

2. DKIM and SPF records: The email passed DKIM and SPF checks, which means that it was sent from a server authorized to send emails on behalf of the sending domain. However, that only vouches for the sender’s own domain, which is itself suspicious and has no connection to OpenAI.

3. Content and language: The language used in the email has a sense of urgency, which is typical of phishing attacks.

4. Contact information: The email provides a recognizable support address (support@openai.com) to make it look more legitimate. However, the overall context and the sender’s address prove otherwise.
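The red flags above can be approximated in code. The following is a minimal sketch, not Barracuda's detection logic: it assumes the expected brand domain is `openai.com`, that the receiving mail server has added a standard `Authentication-Results` header, and that a short hypothetical phrase list is enough to illustrate urgency matching. The names `red_flags`, `EXPECTED_DOMAIN`, and `URGENCY_PHRASES` are illustrative, and the sample message is made up apart from the sender address quoted above.

```python
from email import message_from_string
from email.utils import parseaddr

# Assumption: the domain the impersonated brand really sends from.
EXPECTED_DOMAIN = "openai.com"
# Assumption: a tiny, illustrative phrase list; real filters use far richer signals.
URGENCY_PHRASES = ("urgent", "immediately", "update your payment")


def sender_domain(msg):
    """Return the lower-cased domain of the From address, or '' if absent."""
    _, address = parseaddr(msg.get("From", ""))
    if "@" not in address:
        return ""
    return address.rsplit("@", 1)[1].lower()


def domain_matches(domain, expected):
    """True if domain is the expected domain or one of its subdomains."""
    return domain == expected or domain.endswith("." + expected)


def body_text(msg):
    """Concatenate the plain-text parts of the message, lower-cased."""
    parts = [p for p in msg.walk() if p.get_content_type() == "text/plain"]
    return " ".join(str(p.get_payload()) for p in parts).lower()


def red_flags(raw_email, expected_domain=EXPECTED_DOMAIN):
    """Return a list of human-readable warnings for one raw email."""
    msg = message_from_string(raw_email)
    flags = []

    # Red flag 1: the sender's domain is not the brand's domain.
    domain = sender_domain(msg)
    mismatch = not domain_matches(domain, expected_domain)
    if mismatch:
        flags.append(f"sender domain {domain!r} does not match {expected_domain!r}")

    # Red flag 2: SPF/DKIM pass only vouches for the sender's own domain.
    auth = msg.get("Authentication-Results", "").lower()
    if mismatch and "spf=pass" in auth and "dkim=pass" in auth:
        flags.append("SPF and DKIM pass, but only for the mismatched sender domain")

    # Red flag 3: urgent language in the body.
    if any(phrase in body_text(msg) for phrase in URGENCY_PHRASES):
        flags.append("body uses urgent language")

    return flags


# Made-up sample message using the sender address reported in the article.
SAMPLE = (
    "From: OpenAI Payments <info@mta.topmarinelogistics.com>\n"
    "Subject: Action required\n"
    "Authentication-Results: mx.example.com; spf=pass; dkim=pass\n"
    "\n"
    "Please update your payment information urgently.\n"
)

if __name__ == "__main__":
    for flag in red_flags(SAMPLE):
        print(flag)
```

The sketch treats a passing SPF/DKIM result as a warning only when the sender's domain is already mismatched, because authentication confirms who sent the message, not whether that sender is the brand it claims to be.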

Since the launch of ChatGPT, it has become easier and faster for cyber criminals to write convincing emails. Even so, they still rely on the same basic tricks, such as pretending to be well-known companies or brands. According to Verizon’s 2024 Data Breach Investigations Report, AI was used in very few cyber attacks last year. However, security experts warn that this could change quickly as AI technology improves.

While AI-powered attacks aren’t revolutionary yet, companies should strengthen their defenses now rather than wait for more sophisticated threats to emerge. To stay safe from these evolving threats, organizations need enhanced email security tools that can spot and block AI-generated attacks. Because people are often the weakest link, organizations should also train employees regularly to recognize suspicious emails. Finally, companies can use AI to automate parts of their incident response so that they can deal with phishing attacks quickly.