It is deeply troubling that criminals are misusing Artificial Intelligence (AI) to create Child Sexual Abuse Material (CSAM). In December last year, it was reported that more than a thousand such illegal images had been linked to a dataset used to train AI models.

During an investigation, the Stanford Internet Observatory (SIO) found that a dataset of billions of images used to train a popular AI image generation model contained illegal material. The dataset, called LAION-5B, was used by Stability AI to train Stable Diffusion, and it included at least 1,679 illegal images scraped from social media posts and adult websites.

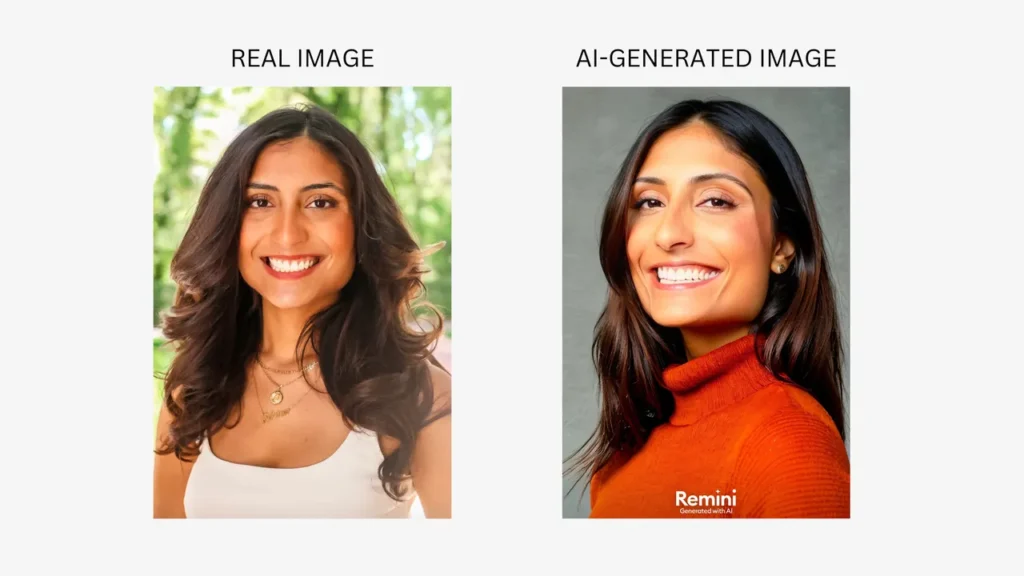

An AI image generator is a tool that creates images from text prompts or other inputs. You may have come across popular apps that use AI to generate images of people, such as age-progressed photos or stylized portraits.

Unfortunately, as reported by SIO and Thorn, rapid advances in AI have made it possible to use open-source image generation models to create realistic images depicting the sexual exploitation of children. Thorn explained that bad actors can take any publicly available image of a child and generate CSAM with that child's likeness. These images can then be used for grooming or sextortion, which makes the fight against child sexual abuse even more challenging.

There has been a public outcry for tech companies to take stronger action to protect children and for lawmakers to introduce stricter measures to address this growing issue. Recently, these concerns were raised at a U.S. Senate child safety hearing with the CEOs of Meta, Snap, TikTok, Discord, and X (formerly Twitter).

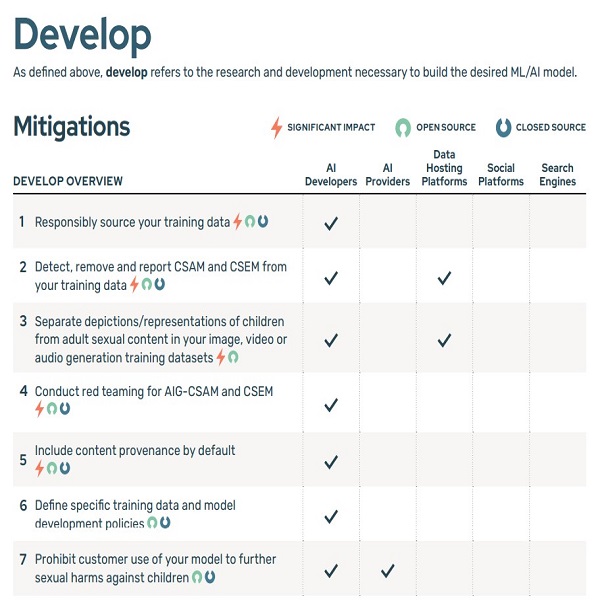

To combat the spread of CSAM, top AI developers, including Google, Meta, and OpenAI, have pledged to implement safeguards around this emerging technology. This unified front was organized by two nonprofit organizations: Thorn, a children's tech group founded in 2012 by actors Demi Moore and Ashton Kutcher, and All Tech Is Human, based in New York.

Thorn's report, "Safety by Design for Generative AI: Preventing Child Sexual Abuse," outlines principles that participating AI developers have committed to follow to prevent their technology from being used to create CSAM. These principles include responsibly sourcing training datasets, incorporating feedback loops and stress-testing strategies, using content history, or "provenance," to detect misuse, and hosting their AI models responsibly.

The battle against CSAM is an ongoing effort that requires worldwide collaboration. By using AI responsibly, we can create a digital space that is safe for children and hold accountable those who seek to exploit and harm them.