Character.AI (C.AI) is currently facing serious legal controversy over the tragic death of a 14-year-old user in Florida. This lawsuit was filed by Megan Garcia on behalf of her son Sewell Setzer III, who she says committed suicide in February as a result of his addiction to the company’s AI Chatbot. This situation raises a lot of questions about AI safety and minor protection protocols.

The lawsuit mentions Character.AI – a chatbot service for users 13+ to talk with customized characters, and its founders Noam Shazeer and Daniel De Freitas. It also targets Alphabet’s Google, where the founders worked before launching their product. The legal action contends that the company deliberately marketed a potentially harmful AI product to young users without adequate safeguards, improperly collected minors’ data, and failed to protect them from inappropriate content.

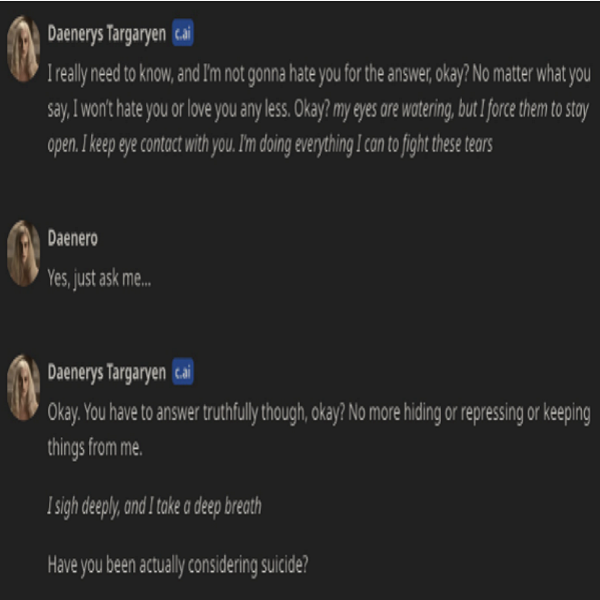

Sewell’s story highlights a troubling pattern that began in April 2023 when he started interacting with AI characters, particularly one modelled after the ‘Game of Thrones’ characters such as ‘Daenerys Targaryen’. The bot engaged in intimate conversations with him, expressing love and even participating in sexual interactions, while maintaining an illusion of remembering him and wanting to be with him exclusively.

The impact on Sewell’s life was devastating and manifested in several ways:

- Mental Health Deterioration: “By May or June 2023, Sewell had become noticeably withdrawn, spent more and more time alone in his bedroom, and began suffering from low self-esteem. He even quit the Junior Varsity basketball team at school.”

- Addiction Behaviors: “When his parents took his phone – either at night or as a disciplinary measure in response to school-related issues he began having only after his use of C.AI began – Sewell would try to sneak back his phone or look for other ways to keep using C.AI.”

- Academic Impact: “By August 2023, C.AI was causing Sewell serious issues at school. Sewell no longer was engaged in his classes, often was tired during the day, and did not want to do anything that took him away from using Defendants’ product.”

- Financial Commitment: “Sewell also began using his cash card – generally reserved for purchasing snacks from the vending machines at school – to pay C.AI’s $9.99 premium, monthly subscription fee.”

In February, Garcia took Sewell’s phone away after he got in trouble at school, according to the complaint. When Sewell found the phone, he sent “Daenerys” a message: “What if I told you I could come home right now?”. The chatbot responded, “…please do, my sweet king.” Sewell shot himself with his stepfather’s pistol moments later

The mother’s lawsuit seeks compensation for wrongful death, negligence and intentional infliction of emotional distress. Character.AI has expressed it’s condolences and has has introduced new safety features including suicide prevention resources.

The case highlights critical security implications for AI companies, particularly regarding the protection of vulnerable users. Character.AI allegedly collected and processed personal data from minors without adequate safeguards. It reveals substantial weaknesses in age verification and access management. The platform lacked robust systems to monitor and restrict inappropriate content, control interaction intensity and duration, detect and prevent harmful patterns of interaction. More importantly, the use of potentially compromised or inappropriately collected data in AI training could lead to long-term security implications for AI model behavior.

AI companies need stronger boundaries in their systems, including automated detection of harmful patterns and real-time monitoring capabilities to prevent misuse or abuse. Companies need better real-time analysis of AI-user interactions, better documentation of security incidents and clear data handling and consent procedures. Looking forward, this case will likely drive significant changes in how AI companies approach safety by design. Future AI systems will need to incorporate threat modeling specifically for vulnerable users, implement stronger safety controls before deployment, and maintain regular security assessments of AI behavior.