Cyber criminals increasingly target Artificial Intelligence (AI) tools and also look for ways to carry out AI-powered attacks. At the same time, cyber security researchers are working diligently to discover and mitigate these attacks before they cause significant security breaches. This is exactly what researchers at Palo Alto Networks did when they uncovered critical vulnerabilities in Google's Vertex AI platform. These previously unknown vulnerabilities show how AI tools could become an entry point for cyber criminals and expose organizations to serious security risks.

Vertex AI is Google Cloud's machine learning (ML) platform for training and deploying ML models and AI applications and for customizing large language models (LLMs) for use in AI-powered applications. The research exposes two significant security flaws that could allow attackers to compromise an organization's entire AI infrastructure.

The two vulnerabilities in the Vertex AI platform are as follows:

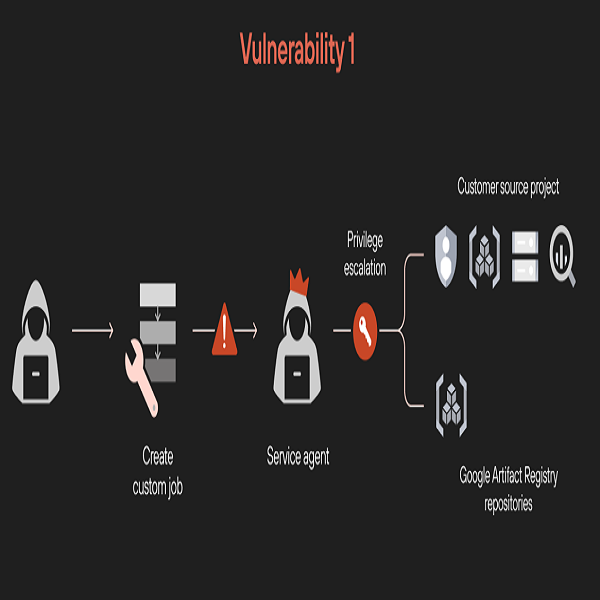

- Privilege escalation via custom jobs: The first vulnerability abuses Vertex AI's custom job feature to escalate privileges. This flaw allows unauthorized users to gain access to restricted data services within a project, meaning a malicious actor could view and manipulate sensitive information far beyond their original permissions and cause a critical security breach.

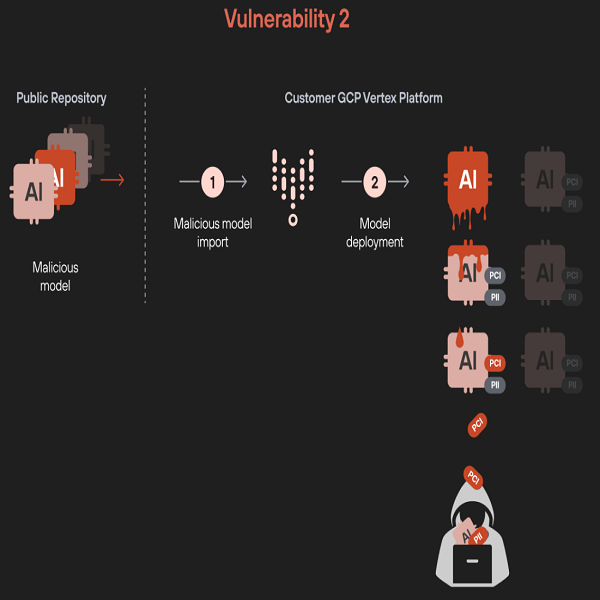

- Model exfiltration via malicious model: The second vulnerability is even more concerning. The researchers demonstrated how a single malicious model could be used to steal other machine learning models within the same environment, creating a serious risk of proprietary and sensitive data exfiltration. For instance, an expert might import what appears to be a legitimate model from a public repository without realizing that it introduces a trojan into their organization's AI infrastructure.

In practical terms, an attacker could upload a poisoned model that, once deployed, exfiltrates every other machine learning model and LLM in the project. The implications are serious: proprietary AI models, sensitive training data, and confidential organizational information could all be compromised through a single malicious upload.

The research highlights a critical weakness in how AI platforms manage model deployments. It demonstrates that what might seem like a harmless permission to upload or deploy a model could lead to the theft of an organization's AI intellectual property. Organizations should therefore exercise extreme caution and follow security best practices when working with AI.

The experts recommend strict separation between development and production environments. This separation reduces the risk that a potentially insecure model reaches production, where an attacker could exploit it, before it has been fully vetted. They also recommend developing comprehensive model validation processes and restricting model deployment permissions to a small number of trusted individuals.
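One way to put the model validation recommendation into practice is to pin each vetted model artifact by its cryptographic digest and refuse to deploy anything that does not match. The Python sketch below is a generic illustration under stated assumptions, not the researchers' method or a Vertex AI feature; the function names (`sha256_of_file`, `is_approved`, `gate_deployment`) and the plain in-memory allowlist are hypothetical. A digest check only confirms that the file being deployed is the exact file that was reviewed in the development environment; it does not by itself detect malicious behavior inside a model, so it complements the review rather than replacing it.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_approved(path: Path, approved: set) -> bool:
    """True only if the artifact's digest matches one recorded after review."""
    return sha256_of_file(path) in approved


def gate_deployment(path: Path, approved: set) -> None:
    """Raise instead of deploying an artifact whose digest was never vetted.

    Hypothetical gate: in a real pipeline the allowlist would live in an
    access-controlled store managed by the few people allowed to deploy.
    """
    if not is_approved(path, approved):
        raise PermissionError(f"{path.name} has not been vetted; refusing to deploy")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        model = Path(d) / "model.bin"
        model.write_bytes(b"vetted weights")
        approved = {sha256_of_file(model)}  # recorded after review in development
        gate_deployment(model, approved)    # passes: digest matches
        model.write_bytes(b"tampered weights")
        try:
            gate_deployment(model, approved)
        except PermissionError as err:
            print(err)  # the modified artifact is rejected
```

Pinning by content digest rather than by file name or repository tag means that a swapped or tampered artifact is rejected even if it keeps the same name as the reviewed model.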

Palo Alto Networks researchers shared their findings with Google, and Google has since implemented fixes for these specific issues on Google Cloud Platform (GCP). As AI becomes increasingly sophisticated, the importance of security cannot be overemphasized. These vulnerabilities serve as a reminder that powerful innovation designed for good can also introduce security challenges. Organizations and individuals must remain vigilant and continuously protect their AI assets by applying security best practices.