Apple officially stepped into the artificial intelligence market in June with the announcement of Apple Intelligence. Alongside it, the company introduced Private Cloud Compute (PCC), a system designed specifically for private AI processing. With PCC, Apple Intelligence can hand complex tasks off to the cloud while maintaining privacy and security.

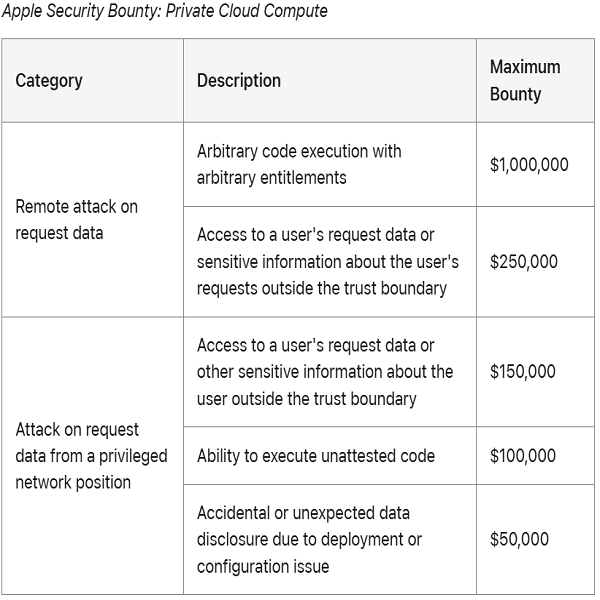

Apple has recently made waves in the AI world with a bold move: it is offering up to $1 million to anyone who can find security flaws in its new Private Cloud Compute system. In other words, anyone who can break into the system through a vulnerability will be rewarded.

This is not the first time Apple has let its code be inspected. After introducing PCC, it allowed security and privacy researchers to check and verify the end-to-end security and privacy it had promised. Weeks later, it also gave third-party auditors and selected security researchers early access to the resources it created to enable this inspection, including the PCC Virtual Research Environment (VRE).

In this recent announcement, Apple added PCC to its bug bounty program. In a blog post, it outlined how much a researcher can earn in each vulnerability category.

Apple also published a PCC Security Guide, which contains technical details of how PCC works and how it is designed to withstand different types of cyberattacks. The company is particularly interested in three main types of potential problems:

- Accidental data disclosure: vulnerabilities leading to unintended data exposure due to configuration flaws or system design issues.

- External compromise from user requests: vulnerabilities enabling external actors to exploit user requests to gain unauthorised access to PCC.

- Physical or internal access: vulnerabilities where access to internal interfaces enables a compromise of the system.

Apple will also consider any security issue that has a significant impact on PCC for an Apple Security Bounty reward, even if it doesn’t match a published category. The company aims to provide verifiable transparency, which it says sets PCC apart from other server-based AI approaches. This isn’t just for bragging rights; it’s a serious security check.

Instead of just saying “trust us,” Apple is inviting experts to verify its security claims. In a world where AI is integrated into almost everything we do, this transparency-first approach could help Apple set a new standard for privacy protection. It also serves as an example for other tech companies, especially those that have been called out for failing to protect the privacy and security of their users' data. With Apple Intelligence set to roll out this week, all eyes are on the company to prove its claims.