As AI technology advances, many gadgets are beginning to look like something out of a James Bond movie. Ray-Ban Meta smart glasses are an iconic AI-driven device. They let users capture and share photos, make video calls, livestream, place calls with voice commands, and control settings through the Meta View app. These features have made the glasses impressive, but they have also raised concerns about user privacy and security.

A commenter on Y Combinator’s Hacker News forum wrote, “Gadgets were more fun before everything felt like a data miner.” Many tech enthusiasts agree and are concerned about the privacy implications and security risks of AI-driven devices. The core issue is how tech companies handle user data.

It has become common practice for tech companies such as Snapchat, Meta, and LinkedIn to train their AI models on user-generated data, often without explicit consent. In an initial interview with TechCrunch, Meta was asked whether it planned to use images from Ray-Ban Meta users for AI training. Neither Anuj Kumar, a senior director working on AI wearables at Meta, nor spokesperson Mimi Huggins could give a clear answer.

Later clarifications from Meta revealed a crucial detail: photos and videos captured on Ray-Ban Meta glasses are not used for AI training by default, but this changes once users engage with AI features. Under Meta’s AI Terms of Service and Privacy Policy, using any AI feature effectively grants Meta permission to use that data for AI training. In other words, users who don’t want their data used for training should not use Meta’s multimodal AI features at all. This leaves users in a frustrating position: they must give up some privacy in order to enjoy the benefits of AI-driven features.

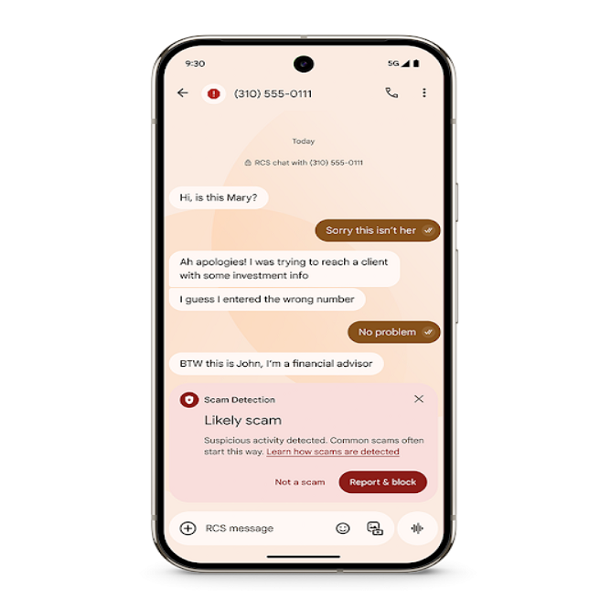

Beyond the use of user-generated data for AI training, a recent demonstration by Harvard students AnhPhu Nguyen and Caine Ardayfio revealed a more alarming possibility. They showed how smart glasses similar to Meta’s could be modified to use facial recognition technology to identify unsuspecting strangers and gather their personal information. Their system could scan faces, then search the internet for additional photos, names, phone numbers, addresses, and even information about relatives, drawing on sources such as online articles and voter registration databases.

While Meta provides guidelines for responsible use of its smart glasses, such as respecting other people’s privacy and avoiding harmful activities like harassment or capturing sensitive data, the potential for misuse remains significant. In the hands of malicious actors, such technology could become a powerful tool for harassment, identity theft, stalking, or other cybercrimes.

Modern gadgets offer fascinating capabilities and convenience, but these benefits often come with hidden costs to privacy. Although regulators have fined various tech companies, and in some cases banned their services outright, for infringing on user privacy, stronger safeguards are still needed so that users are not forced to choose between enjoying AI features and keeping control over their personal information.