X (formerly known as Twitter) has agreed to stop using personal data from European Union (EU) users to train its AI models after a legal tussle with Ireland’s Data Protection Commission (DPC). The case highlights the growing tension between AI innovation and privacy regulation, and what’s at stake for tech companies globally.

Why Ireland’s Data Regulator Targeted X

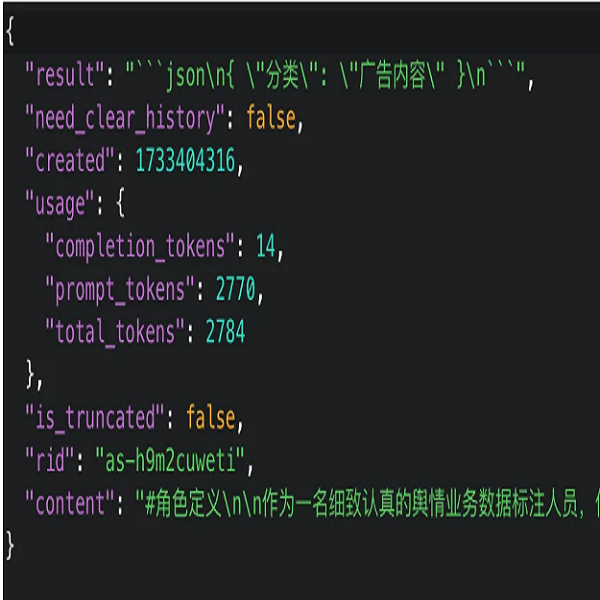

Starting in May 2024, X began using personal data from EU users, including their public posts, to train its Grok AI chatbot. The problem was that X did not obtain clear, explicit consent: users were opted in by default and had to find a setting to opt out. The DPC, the body responsible for ensuring that tech companies headquartered in Ireland follow the EU’s General Data Protection Regulation (GDPR), took issue with this practice.

The DPC’s concern was straightforward: under the GDPR, companies need a valid legal basis and must respect users’ rights before processing personal data for AI training. In August 2024, the regulator filed proceedings in the Irish High Court, seeking an order requiring X to suspend the practice and comply with the GDPR.

Facing mounting legal pressure, X backed down. In August 2024, the company agreed in court to suspend processing EU users’ personal data to train Grok. In early September, the DPC announced that the proceedings had concluded after X committed to make that suspension permanent.

Key Takeaways for Cybersecurity Professionals

- If you’re handling user data, especially in regions governed by laws like the GDPR, you need to follow strict rules about consent and transparency. Data privacy isn’t optional, and regulatory bodies are ready to enforce compliance. Cybersecurity professionals must ensure that AI development respects user privacy to avoid legal battles and fines.

- The risks of using personal data for AI training go beyond legal violations. Cybersecurity professionals need to prioritize encrypting, anonymizing, and regularly auditing any personal data used for AI purposes. These controls reduce the risk of breaches and misuse, and they support compliance with privacy regulations.

- At the EU level, the European Data Protection Board (EDPB), which brings together the national data protection authorities, is expected to push for consistent enforcement of these rules across the region. As AI evolves, regulatory scrutiny will only increase. Building in compliance from the start helps you avoid both legal penalties and reputational damage.

- The fallout from X’s case has far-reaching implications. It shows that regulators are serious about reining in the wild west of AI development. For cybersecurity professionals, the message is clear: privacy and AI governance need to be integrated into your data protection strategies. This will likely be the norm moving forward.

Conclusion

Ireland’s DPC has set a significant precedent in AI governance. Because the DPC has also asked the European Data Protection Board for an opinion on how the GDPR applies to AI model training, we can expect more standardized rules across the EU on how tech companies can (and cannot) use personal data for AI development.

For now, X’s decision to halt the use of EU users’ personal data is a victory for data privacy advocates. But it’s also a stark reminder for companies around the world: AI innovation must go hand in hand with ethical data practices.