U.S. authorities have disrupted a Russian government-backed campaign that used an AI-powered bot farm to spread disinformation in the United States and abroad. The campaign used nearly 1,000 AI-generated bots on X (formerly Twitter) that posed as American users and spread pro-Russian messages about the war in Ukraine.

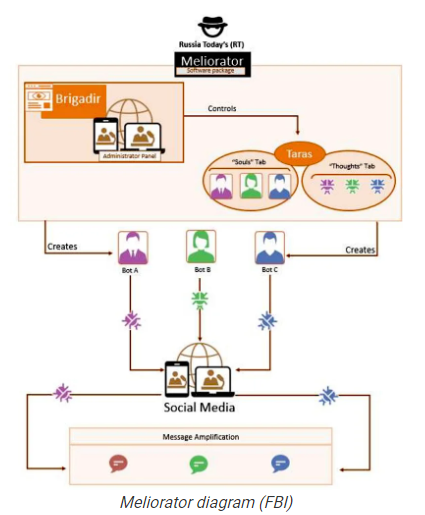

A deputy editor at RT (formerly Russia Today), a Kremlin-owned media outlet, led the operation alongside an officer of Russia's FSB. The bots were run with AI-enhanced software called "Meliorator," which pushed Russian propaganda while making it appear that many Americans shared these views. Meliorator was designed to create authentic-looking social media accounts that posed as real people and spread disinformation.

The Justice Department seized two domains (mlrtr[.]com and otanmail[.]com) that were used to create and manage the bots, and it compelled X to provide information about the associated accounts. X also removed 968 accounts identified as part of RT's bot farm, many of which claimed to belong to Americans. FBI Director Christopher Wray described the action as the first time the U.S. had disrupted a Russian-sponsored, AI-enhanced bot farm. The campaign targeted multiple countries, including the United States, Poland, Germany, the Netherlands, Spain, Ukraine, and Israel.

This campaign shows how AI is being used to influence public opinion. AI makes fake accounts easier to create and manage at scale, which makes such operations more effective and harder to detect. Nina Jankowicz, head of the American Sunlight Project, noted that AI has made disinformation efforts smoother, creating new challenges for those trying to counter them.

The investigation is ongoing, and authorities have not yet announced any criminal charges. Even so, the case illustrates how complex digital conflict has become. The U.S. government's response signals a firm stance against foreign influence operations, especially those that rely on advanced technology.

This case is a reminder of how these threats continue to evolve. As AI technology advances, strategies to detect and stop such sophisticated efforts must improve as well. This disruption is a crucial step in protecting the integrity of information and maintaining public trust in democratic institutions.

Conclusion

Stopping this AI-powered bot farm is a significant step in defending against foreign disinformation campaigns. It underscores the need for vigilant, adaptive countermeasures as technology continues to change how misinformation and influence tactics are deployed.