DARPA’s AI Cyber Challenge (AIxCC) aims to speed up the creation of AI-driven cybersecurity tools that can autonomously detect, mitigate, and defend against cyber threats. The competition brings together researchers, developers, and cybersecurity experts to build AI systems that handle tasks usually performed by human cybersecurity professionals, but with greater speed and accuracy. The challenge focuses on autonomous detection and response, adaptive learning, scalability, and collaboration between AI systems and human operators.

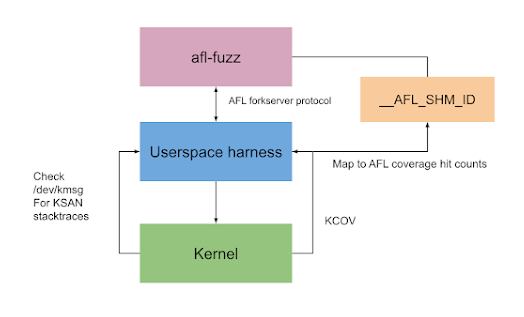

In the AI Cyber Challenge, participants build on established fuzzing infrastructure. OSS-Fuzz, which has identified over 11,000 vulnerabilities across 1,200 projects, is a key tool for competitors. For userspace challenges, teams can use existing toolchains and fuzzing engines like libFuzzer, AFL, and Jazzer. Kernel fuzzing is harder. For kernel challenges, teams have explored options like Syzkaller and AFL, adapting AFL with techniques similar to those detailed by Cloudflare. This approach compiles the kernel with KCOV and KSAN instrumentation and executes inputs through a userspace harness running in QEMU.

Credit: security.googleblog.com

For kernel fuzzing, teams chose AFL and modified it to integrate with AIxCC’s userspace harness. This process involved compiling the kernel with coverage instrumentation and running it in a virtualized environment. The harness collects data on which parts of the code are being exercised and looks for issues like memory leaks. It manages problems like file descriptor leaks either by carefully controlling resources or by restarting the process. AI helps improve fuzzing by creating initial test inputs based on the harness’s code, which makes complicated input formats easier to handle and speeds up slow tests. Experiments by Brendan Dolan-Gavitt from NYU illustrate AI’s potential in this domain.
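The coverage feedback loop described above can be illustrated with a toy example. This is a minimal sketch, not AFL or KCOV: `toy_target`, its branch IDs, and the `FUZ` trigger are all hypothetical stand-ins for an instrumented harness, and a `RuntimeError` stands in for a sanitizer report. The point is only that inputs reaching new code are kept as the basis for further mutation.

```python
import random


def toy_target(data: bytes) -> set:
    """Hypothetical instrumented target: returns the branch IDs it executed.

    Raises RuntimeError to simulate a sanitizer-detected crash.
    """
    hit = {"entry"}
    if len(data) > 0 and data[0] == ord("F"):
        hit.add("b1")
        if len(data) > 1 and data[1] == ord("U"):
            hit.add("b2")
            if len(data) > 2 and data[2] == ord("Z"):
                raise RuntimeError("simulated sanitizer crash")
    return hit


def mutate(data: bytes, rng: random.Random) -> bytes:
    """Append a random byte to short inputs some of the time, else overwrite one."""
    buf = bytearray(data)
    if not buf or (len(buf) < 4 and rng.random() < 0.3):
        buf.append(rng.randrange(256))
    else:
        buf[rng.randrange(len(buf))] = rng.randrange(256)
    return bytes(buf)


def fuzz(seeds, max_iters=200_000, seed=0):
    """Return the first crashing input found, or None if the budget runs out.

    Assumes the seed inputs themselves do not crash the target.
    """
    rng = random.Random(seed)
    corpus = list(seeds)
    seen = set()
    for s in corpus:
        seen |= toy_target(s)
    for _ in range(max_iters):
        candidate = mutate(rng.choice(corpus), rng)
        try:
            coverage = toy_target(candidate)
        except RuntimeError:
            return candidate
        if not coverage <= seen:  # candidate reached a branch not seen before
            seen |= coverage
            corpus.append(candidate)
    return None


if __name__ == "__main__":
    print(fuzz([b"A"]))
```

A better starting corpus shortens the search: seeding with `b"FU"` instead of `b"A"` skips two of the three discovery steps, which is the role the article attributes to AI-generated initial inputs.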

Static analysis, augmented with AI, can reduce false positives and improve bug-finding accuracy. Once vulnerabilities are identified, competitors must produce key evidence, including the culprit commit and the expected sanitizer. This involves git history bisection and stack trace analysis. Automated patching remains challenging, often requiring brute-force methods like delta debugging to revert problematic commits without breaking functionality. AI can assist by suggesting patches, validating them through crash tests, and iteratively refining the fixes.
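Both search steps mentioned here can be sketched in a few lines. The code below is an illustration under stated assumptions, not any team's implementation: `find_culprit` mirrors the binary search that `git bisect` performs, and `ddmin` is a simplified form of delta debugging. The `crashes` and `still_fixes` callbacks are hypothetical; in practice they would check out a commit or revert a set of hunks, rebuild, replay the crashing input, and run the functionality tests.

```python
from typing import Callable, List, Sequence


def find_culprit(commits: Sequence[str], crashes: Callable[[str], bool]) -> str:
    """Binary-search an oldest-to-newest commit list for the first crashing commit.

    Assumes the oldest commit is clean, the newest crashes, and the crash
    persists once introduced.
    """
    lo, hi = 0, len(commits) - 1  # invariant: commits[lo] is good, commits[hi] is bad
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if crashes(commits[mid]):
            hi = mid
        else:
            lo = mid
    return commits[hi]


def ddmin(changes: List[str], still_fixes: Callable[[List[str]], bool]) -> List[str]:
    """Simplified delta debugging: shrink `changes` while `still_fixes` holds.

    Assumes `still_fixes(changes)` is True for the full list and that the
    items in `changes` are unique.
    """
    n = 2  # number of chunks to split into
    while len(changes) >= 2:
        chunk = max(1, len(changes) // n)
        subsets = [changes[i:i + chunk] for i in range(0, len(changes), chunk)]
        reduced = False
        for subset in subsets:
            complement = [c for c in changes if c not in subset]
            if still_fixes(subset):
                changes, n, reduced = subset, 2, True
                break
            if complement and still_fixes(complement):
                changes, n, reduced = complement, max(n - 1, 2), True
                break
        if not reduced:
            if n >= len(changes):
                break  # already at single-item granularity; nothing more to remove
            n = min(len(changes), n * 2)
    return changes


if __name__ == "__main__":
    history = [f"c{i}" for i in range(10)]
    print(find_culprit(history, lambda c: int(c[1:]) >= 6))

    hunks = [f"h{i}" for i in range(8)]
    needed = {"h2", "h5"}  # hypothetical: reverting these two hunks removes the crash
    print(ddmin(hunks, lambda subset: needed <= set(subset)))
```

Bisection needs only a logarithmic number of builds, while delta debugging may need many more, which is one reason the patching step is the more expensive of the two.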

DARPA’s AI Cyber Challenge marks a significant advancement in integrating AI into cybersecurity. We expect the approaches developed through this competition to shape the future of cyber defense. As AI evolves, it will play an increasingly important role in protecting digital infrastructure and ensuring national security. Key trends to watch include the development of more sophisticated AI models capable of understanding complex network environments, the integration of AI with other technologies such as blockchain and quantum computing, and the establishment of global frameworks for AI-driven cybersecurity collaboration.

In conclusion, DARPA is paving the way for a new era of cyber defense by fostering innovation and encouraging the development of advanced AI-driven defense mechanisms. The strategies explored in this competition, from machine learning and behavioral analysis to adversarial machine learning and collaborative defense, highlight the diverse capabilities of AI in combating cyber threats. As defenders continue to refine and expand these techniques, the future of cybersecurity looks increasingly resilient and adaptive in the face of ever-evolving challenges.