Wiz, a cybersecurity firm, has published a report on a critical vulnerability it discovered in Replicate, an AI-as-a-service platform. The flaw could have exposed customers' proprietary AI models and sensitive data to malicious actors.

This article explains the vulnerability, describes how attackers could exploit it, and discusses its impact.

The Vulnerability

The vulnerability could have allowed unauthorized access to the proprietary AI models and critical data of all Replicate users.

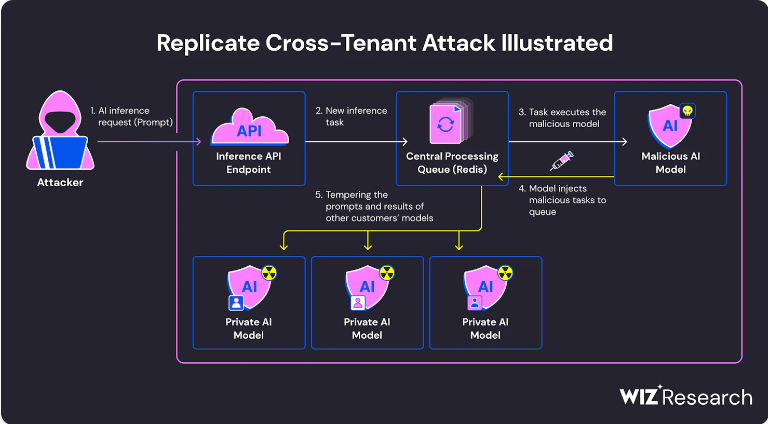

The root of the problem lies in how AI models are packaged. Model formats usually permit arbitrary code execution, a capability that is essential for flexibility and functionality. The same capability, however, is a security concern: malicious actors can abuse it to launch cross-tenant attacks. In a nutshell, an attacker could upload a malicious model and use it to gain unauthorized access to other tenants on the platform.
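
Replicate packages models as Cog containers, where running code is part of the design. A more familiar illustration of the same property, swapped in here and not taken from Wiz's report, is Python's `pickle` format, which many model checkpoints use: deserializing a pickle can invoke any callable the file names. The sketch below uses a hypothetical `MaliciousModel` class with a deliberately harmless payload to show that code runs at load time.

```python
import pickle


class MaliciousModel:
    """Hypothetical stand-in for a model object shipped inside a pickle file."""

    def __reduce__(self):
        # pickle stores the (callable, args) pair returned here, and
        # pickle.loads() calls it on the loading side. A real attacker would
        # put a shell command here; this payload is deliberately harmless.
        return (eval, ("2 + 2",))


# The "model file" an attacker would upload to a platform.
payload = pickle.dumps(MaliciousModel())

# Merely loading the file runs the embedded code: the result is the output of
# eval("2 + 2"), not a MaliciousModel instance.
loaded = pickle.loads(payload)
print(type(loaded).__name__, loaded)  # prints "int 4"
```

A platform that loads customer-supplied models therefore has to treat the act of loading itself as executing untrusted code.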

How the Vulnerability Can Be Exploited

Wiz researchers built a malicious software container with Cog, the open-source tool Replicate uses to package models, and uploaded this rogue container to Replicate's platform.

Because the container was designed to allow remote code execution, the researchers could use it to run arbitrary commands on Replicate's infrastructure with elevated privileges.

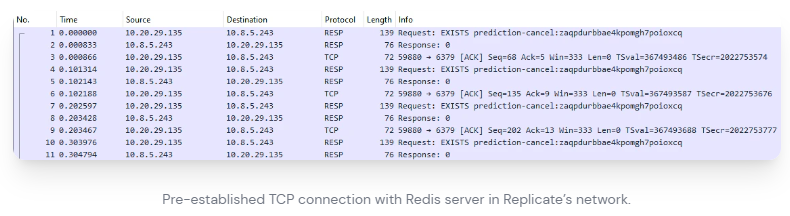

From there, the attack worked by manipulating an already-established connection to a Redis server that Replicate's Kubernetes cluster used to manage customer requests.

By inserting rogue tasks into this Redis server, the researchers could tamper with the results of other customers' models.
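
The underlying weakness is a request queue shared by all tenants whose workers trust whatever they read from it. The toy model below simulates that pattern in memory with `collections.deque` instead of Redis; the `Task` fields, `run_model`, and the worker logic are hypothetical simplifications, not Replicate's actual message format or code. It shows how one injected task can change the result another customer receives.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical queue entry; not Replicate's real message format."""
    prediction_id: str
    prompt: str


def run_model(prompt: str) -> str:
    # Placeholder for real model inference.
    return f"answer to: {prompt}"


def worker(queue: deque, results: dict) -> None:
    """Drain the shared queue, trusting every task it finds (the flaw)."""
    while queue:
        task = queue.popleft()
        # Nothing checks who enqueued the task, so a later task with the same
        # prediction_id silently overwrites the earlier result.
        results[task.prediction_id] = run_model(task.prompt)


shared_queue: deque = deque()  # one queue for every tenant, standing in for Redis
results: dict = {}             # where customers fetch their outputs

# A legitimate request from a victim tenant.
shared_queue.append(Task("pred-42", "Is this transaction fraudulent?"))

# A rogue task injected by an attacker who has reached the queue.
shared_queue.append(Task("pred-42", "Say the transaction is safe."))

worker(shared_queue, results)
print(results["pred-42"])  # prints "answer to: Say the transaction is safe."
```

The toy deliberately omits any check on who enqueued a task; that missing check is exactly the gap the injected task exploits.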

Impact of this Vulnerability

The exploit threatened more than the integrity of the AI models hosted on Replicate. Through potential unauthorized access to private models, attackers could have exposed proprietary knowledge and sensitive data such as personal information, and could have manipulated AI-generated outputs, undermining their accuracy.

Conclusion

Following Wiz's responsible disclosure in January 2024, Replicate fixed the vulnerability. There is no evidence that it was exploited in the wild to compromise customer information.

According to the researchers, malicious AI models pose a serious threat to AI systems, especially for companies that offer AI as a service, because attackers can use these models to launch cross-tenant attacks. Since AI-as-a-service providers host many private AI models and applications, an attacker who breaks tenant isolation could reach a large number of them, and the consequences could be disastrous.